Did you say you want a distributed rate limiter?

Build a distributed rate limiter backed by Momento.

Rate-limiting is one of my favorite topics in computer science because it exists literally everywhere. Whether you are looking at your Twitter news feed or streaming a live video, there’s a non-zero chance that you are interacting with a rate-limiter. It is watching you, making decisions about your traffic, and rightfully stopping you if you start making too much noise.

What’s the fuss about rate-limiters?

The need for rate-limiting stems from the fundamental requirement to maintain the health and quality of any service. Without it, resources could easily become overwhelmed, leading to service degradation or outright failure. This is particularly important in distributed systems, web services, and multi-tenant applications where client requests can vary dramatically in volume and frequency. Rate-limiting ensures a fair distribution of resources, prevents abuse, and can even be a crucial component in defending against certain types of cyber-attacks, such as Distributed Denial of Service (DDoS) attacks.

Throttling challenges with stateless rate limiters

A common form of rate-limiting is capping the number of requests that a single host makes to a database. For instance, if there are 10 hosts and the database can handle a maximum of 1000 queries per second, you can cap the query rate at 100 queries per second for each host. This static split is a stateless rate limiter: each host enforces its own cap without knowing what the other hosts are doing.

While stateless rate limiters offer a straightforward solution, they fall short in dynamic and distributed environments. They operate in isolation, oblivious to the overall system’s state, leading to inefficiencies and potential unfairness. This rigid approach can’t adapt to fluctuating demands or system changes, often resulting in either underutilized resources or unnecessary throttling. As systems grow more complex, the need for a more adaptive, aware, and stateful rate-limiting solution becomes clear. This is where leveraging a service like Momento Cache comes into play, offering the tools necessary to build a smarter, more responsive rate limiter.
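To make the underutilization concrete, here is a small sketch using the numbers from the example above. The demand figures (one busy host, nine quiet ones) and all function names are assumptions chosen for illustration; they do not come from any real workload or library.

```typescript
// Numbers from the example above: 10 hosts sharing a database that handles 1000 QPS.
const DB_CAPACITY_QPS = 1000;
const HOST_COUNT = 10;

// Stateless cap: every host gets the same fixed share of the capacity.
function staticPerHostCap(capacity: number, hosts: number): number {
  return Math.floor(capacity / hosts);
}

// Traffic admitted when each host enforces its own cap in isolation.
function admittedWithStaticCap(demandPerHost: number[], cap: number): number {
  return demandPerHost.reduce((sum, d) => sum + Math.min(d, cap), 0);
}

// Traffic admitted by an idealised limiter that sees the global total (shared state).
function admittedWithSharedLimit(demandPerHost: number[], capacity: number): number {
  const total = demandPerHost.reduce((sum, d) => sum + d, 0);
  return Math.min(total, capacity);
}

// Hypothetical demand: one busy host wants 400 QPS, the other nine want 10 QPS each.
const demand: number[] = [400, ...Array<number>(HOST_COUNT - 1).fill(10)];
const cap = staticPerHostCap(DB_CAPACITY_QPS, HOST_COUNT);

console.log(cap); // 100
console.log(admittedWithStaticCap(demand, cap)); // 190
console.log(admittedWithSharedLimit(demand, DB_CAPACITY_QPS)); // 490
```

With the static cap, the busy host is throttled to 100 QPS even though the database is far from saturated; a limiter with shared state could admit all 490 QPS of demand.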

Momento to the rescue!

Let’s imagine you want to create a distributed rate-limiter that could effectively manage transactions-per-minute (TPM) for individual users. We venture down this path with two distinct approaches, both leveraging Momento’s capabilities, yet differing in their mechanisms.

The first approach, which we recommend, utilizes Momento’s increment and updateTTL APIs. This method proves to be not only efficient but also highly accurate. It hinges on incrementing a uniquely constructed key for each user and time window, and setting a time-to-live (TTL) on that key when the first request of the minute arrives.

    /**
     * Generates a unique key for a user (baseKey) for the current minute. This key will serve as the
     * Momento cache key where we store the number of calls made by a user in a given minute.
     */
    generateMinuteKey(baseKey: string): string {
      const currentDate = new Date();
      const currentMinute = currentDate.getMinutes();
      return `${baseKey}_${currentMinute}`;
    }

Requests are allowed until the count hits the predetermined limit, at which point throttling is enforced.
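The keying scheme and the counting rule can be tried out without a cache service. The following is a minimal sketch, assuming a hypothetical in-memory `InMemoryCounterStore` that stands in for a cache with atomic increment and per-key expiry; it is not the Momento client, and the helper names and the injected `now` parameter are illustrative only.

```typescript
// Hypothetical in-memory stand-in for a cache with atomic increment and TTL.
class InMemoryCounterStore {
  private entries = new Map<string, { count: number; expiresAtMs: number }>();

  // Increments the counter and returns the new value. A missing or expired
  // key starts a fresh counter at 1 and receives the given TTL.
  increment(key: string, ttlMs: number, nowMs: number): number {
    const entry = this.entries.get(key);
    if (entry === undefined || entry.expiresAtMs <= nowMs) {
      this.entries.set(key, { count: 1, expiresAtMs: nowMs + ttlMs });
      return 1;
    }
    entry.count += 1;
    return entry.count;
  }
}

// Same keying idea as generateMinuteKey, with the clock passed in for testability.
function minuteKey(baseKey: string, now: Date): string {
  return `${baseKey}_${now.getMinutes()}`;
}

// Returns true once the user has made more than `limit` requests in the current minute.
function isOverLimit(
  store: InMemoryCounterStore,
  userId: string,
  limit: number,
  now: Date
): boolean {
  const count = store.increment(minuteKey(userId, now), 60 * 1000, now.getTime());
  return count > limit;
}

const store = new InMemoryCounterStore();
const noon = new Date(2024, 0, 1, 12, 0, 0);
const oneMinuteLater = new Date(2024, 0, 1, 12, 1, 0);

// Four requests in the same minute against a limit of 3, then one in the next minute.
const decisions = [
  isOverLimit(store, "user_42", 3, noon),
  isOverLimit(store, "user_42", 3, noon),
  isOverLimit(store, "user_42", 3, noon),
  isOverLimit(store, "user_42", 3, noon),
  isOverLimit(store, "user_42", 3, oneMinuteLater),
];
console.log(decisions); // [ false, false, false, true, false ]
```

The fourth request in the same minute is throttled, and the counter starts over once the minute (and therefore the key) changes.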

    public async isLimitExceeded(id: string): Promise<boolean> {
      const currentMinuteKey = this.generateMinuteKey(id);
      // We do not pass a TTL here because we don't know whether the key for this user already exists.
      const resp = await this._client.increment(
        RATE_LIMITER_CACHE_NAME,
        currentMinuteKey
      );

      if (resp instanceof CacheIncrement.Success) {
        if (resp.value() <= this._limit) {
          // A returned value of 1 means this was the first request in this minute for the
          // given user, so we set the TTL for this minute's key to 60 seconds now.
          if (resp.value() === 1) {
            await this._client.updateTtl(
              RATE_LIMITER_CACHE_NAME,
              currentMinuteKey,
              RATE_LIMITER_TTL_MILLIS
            );
          }
          // We are within the limit, so we can allow the request.
          return false;
        }
      } else if (resp instanceof CacheIncrement.Error) {
        throw new Error(resp.message());
      }

      // The user has exhausted their limit for the current minute.
      return true;
    }

The second approach takes cues from traditional methods like those recommended by Redis, relying on get and increment APIs. Although similar in its goal, this method occasionally falls short in accuracy, particularly under high demand. Because the read and the write are separate calls, two concurrent requests can both read the same count and both be admitted, a race condition inherent in the classic read-modify-write operation.

public async isLimitExceeded(id: string): Promise {

const currentMinuteKey = this.generateMinuteKey(id);

// read current limit

const getResp = await this._client.get(

RATE_LIMITER_CACHE_NAME,

currentMinuteKey

);

if (getResp instanceof CacheGet.Hit) {

// cache hit! Check if value is less than limit

if (parseInt(getResp.value(), 10) <= this._limit) {

await this._client.increment(RATE_LIMITER_CACHE_NAME, currentMinuteKey);

// we are below the limit, allow request!

return false;

}

} else if (getResp instanceof CacheGet.Miss) {

// a miss indicates first call to key, so we set TTL now to 1 min

await this._client.increment(

RATE_LIMITER_CACHE_NAME,

currentMinuteKey,

1,

{

ttl: RATE_LIMITER_TTL_MILLIS,

}

);

// first request, we are below the limit, allow request!

return false;

} else if (getResp instanceof CacheGet.Error) {

throw new Error(getResp.message());

}

// the user has exhausted their limit for the current minute

return true;

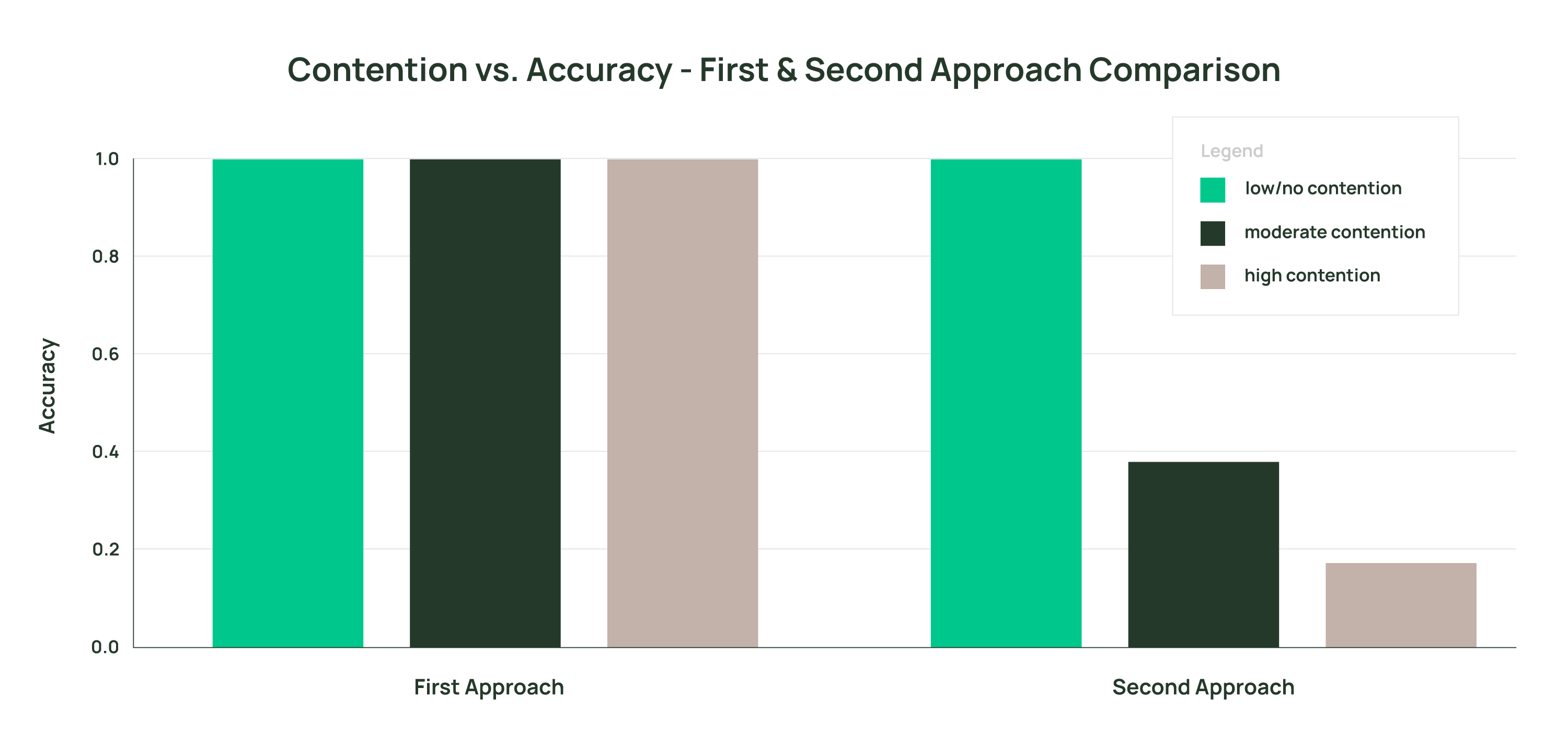

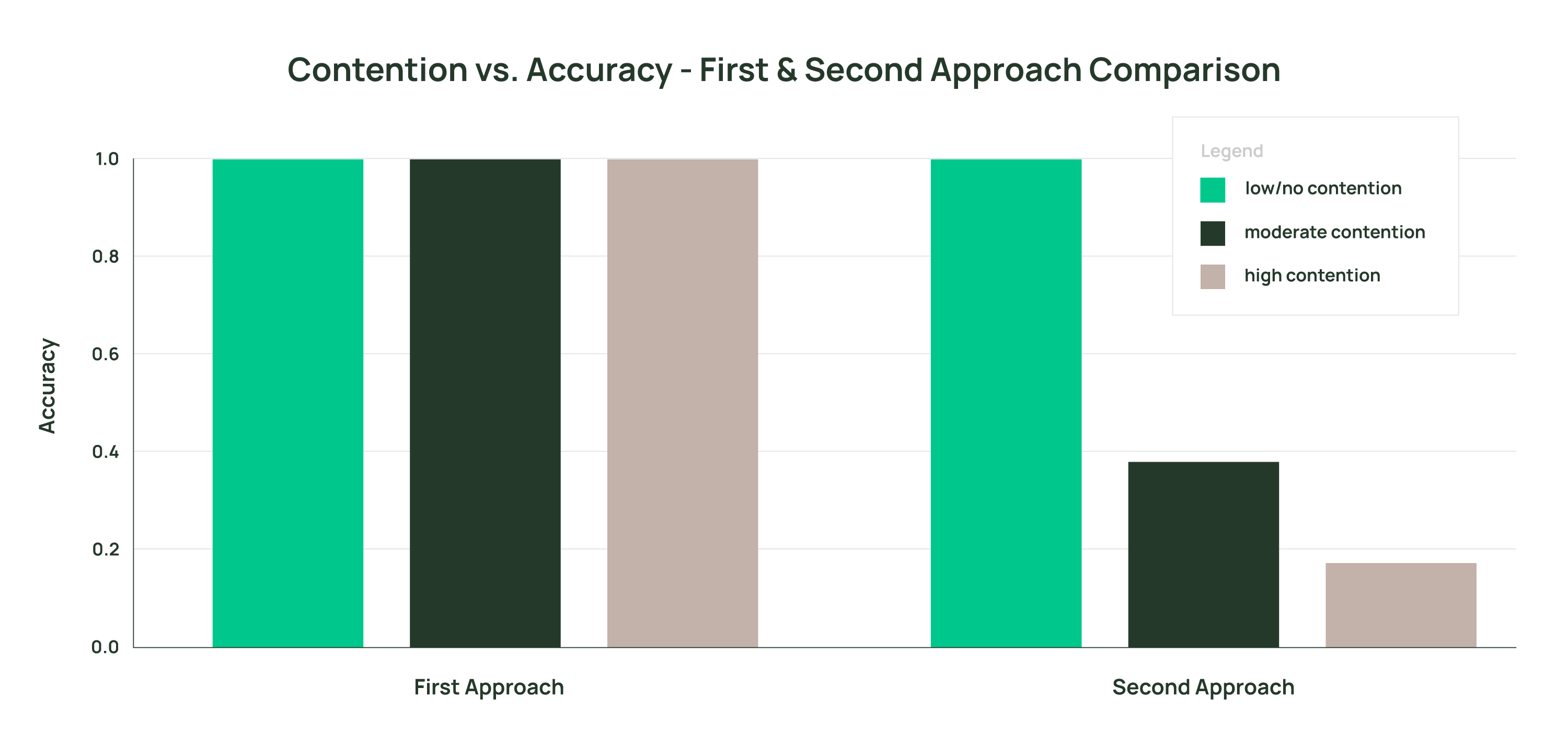

}Our extensive simulations underscore the superiority of the first approach. In scenarios of high contention, it not only maintains perfect accuracy, but also exhibits commendable latency metrics. The second approach, while occasionally exhibiting slightly lower latency, consistently falters in accuracy when contention increases, a trade-off that might not be acceptable in many applications.
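The accuracy gap under contention can be reproduced in a few lines. This is a minimal sketch, not the simulation referred to above: `FakeCache` is a hypothetical in-memory stand-in for the cache client (each call yields once to mimic a network round trip), TTL handling is left out, and the request count and limit are arbitrary.

```typescript
// Hypothetical in-memory cache; every operation yields once before touching state.
class FakeCache {
  private counts = new Map<string, number>();

  async get(key: string): Promise<number | undefined> {
    await Promise.resolve(); // stand-in for a network round trip
    return this.counts.get(key);
  }

  // The read and write happen together after the yield, so the increment is atomic.
  async increment(key: string): Promise<number> {
    await Promise.resolve();
    const next = (this.counts.get(key) ?? 0) + 1;
    this.counts.set(key, next);
    return next;
  }
}

type AllowFn = (cache: FakeCache, key: string, limit: number) => Promise<boolean>;

// Approach 1: one atomic increment, then decide based on the returned count.
const allowWithIncrement: AllowFn = async (cache, key, limit) =>
  (await cache.increment(key)) <= limit;

// Approach 2: read, decide, then write. Other requests can interleave between the steps.
const allowWithGetThenIncrement: AllowFn = async (cache, key, limit) => {
  const current = (await cache.get(key)) ?? 0;
  if (current < limit) {
    await cache.increment(key);
    return true;
  }
  return false;
};

// Fires `requests` concurrent calls for one user and counts how many were admitted.
async function countAllowed(allow: AllowFn, requests: number, limit: number): Promise<number> {
  const cache = new FakeCache();
  const decisions = await Promise.all(
    Array.from({ length: requests }, () => allow(cache, "user_0", limit))
  );
  return decisions.filter((allowed) => allowed).length;
}

async function main(): Promise<void> {
  // Admits exactly the limit.
  console.log("increment only:", await countAllowed(allowWithIncrement, 20, 5));
  // Admits more than the limit, because callers read the counter before increments land.
  console.log("get then increment:", await countAllowed(allowWithGetThenIncrement, 20, 5));
}

main().catch(console.error);
```

With 20 concurrent requests and a limit of 5, the increment-only version admits exactly 5, while the get-then-increment version over-admits because every caller observes a stale count.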

Conclusion

The quest for an efficient distributed rate-limiter has led us to a compelling solution backed by Momento. Through rigorous testing, we’ve discovered that leveraging Momento’s increment and updateTTL APIs yields a robust rate-limiter that excels in accuracy and resilience, particularly in high-contention scenarios.