Fighting off faux-serverless bandits with the true definition of serverless

Let’s hold “serverless” services accountable to what that really means.

The term serverless became widely accepted about seven years ago, but its mission has been around for almost two decades. In fact, Simple Queue Service (SQS) and S3, launched in 2004 and 2006 respectively, have always been serverless. They enabled rapid experimentation by reducing the cost and complexity associated with provisioned capacity. In the early days of cloud computing, data center providers attempted to hijack the “cloud” and started applying a “cloud” sticker to their clearly-not-cloud offerings. We see a similar trend with serverless. Adoption has been so effective that providers are slapping the serverless sticker on services just to check a box. This misrepresentation of serverless is causing widespread confusion and risks diluting the serverless mission.

Fortunately, the real cloud overcame the threat posed by the slap-on-cloud regimes. Calling out the “cloud washers” and spelling out what made cloud real was pivotal in letting cloud prevail over private data centers. This blog makes a case for the real mission of serverless, outlines what isn’t serverless, and presents a simple litmus test to quickly identify serverless bandits.

What is serverless? And what isn’t?

The serverless mission is to accelerate experimentation and innovation by reducing the barriers to entry (cost, complexity, and time-to-market). When a product is truly serverless, developer productivity goes up. Configurations, benchmarking, outages, provisioning… All these distractions are eradicated, allowing developers to simply innovate faster and have little or no infrastructure to maintain. This mission is realized in the core tenets of serverless.

The core tenets of serverless

Simplicity

Serverless has a higher bar for simplicity. No configurations, no delays—and things just work. Traditional server-based customers usually desire more control. This control comes in the form of configurations—ranging from instance sizes to instance families to number of instances to provisioned capacity units, etc. The burden of getting these abstractions right falls entirely on the user instead of the service provider. This does not mean traditional cloud infrastructure is bad—it’s just picking different tradeoffs.

Instant start

Serverless gets developers going with a single API call—and a seamless provisioning experience. There is no waiting for specific instances to wake up, bootstrap, etc. When you provision a bucket in S3, you are not waiting for a cluster to come up before you start sending requests to the bucket. It’s ready immediately.

Instant elasticity

Elasticity is relative. Before the cloud, it was measured in the weeks and months it took to order capacity in the form of physical servers. These days, it ought to be measured in seconds. Serverless really delivers here with capacity that is ready to be deployed without waiting for instances (virtual servers) to come up.

Best practices by default

Serverless bakes in the best practices for configuration, scale, security, etc. At first glance, these often come across as limitations. However, limitations, albeit frustrating, can actually be good for you. You *can* store a 512MB object in Redis in a map of up to 2 billion items, until one day someone issues a select * from map query in the form of a simple HGETALL. This query works fine until a large item (or many items) is accidentally inserted into the map. The blast radius here isn’t just the large item. It is potentially every customer who has this query in their critical path. Bugs happen. Guardrails, like limits, reduce the likelihood of these bugs being catastrophic. These limits eliminate distractions that would otherwise have manifested as major outages, root-cause analysis, and triage.
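To make the guardrail idea concrete, here is a minimal sketch in Python. It is not Redis or any real service's API: `BoundedMap`, `MAX_ITEM_BYTES`, and `MAX_ITEMS` are hypothetical names, and the limits are arbitrary illustrative values. It shows how rejecting an oversized write up front keeps the worst-case cost of a read-everything call bounded.

```python
class LimitExceeded(Exception):
    """Raised when a write would break a guardrail."""


class BoundedMap:
    """Toy in-memory map with per-item and item-count limits (hypothetical)."""

    MAX_ITEM_BYTES = 1024  # illustrative limit, not a real service's value
    MAX_ITEMS = 100        # illustrative limit, not a real service's value

    def __init__(self):
        self._items = {}

    def set(self, field: str, value: bytes) -> None:
        # Reject the bad write at insert time, where only the writer is affected.
        if len(value) > self.MAX_ITEM_BYTES:
            raise LimitExceeded(f"item {field!r} is {len(value)} bytes")
        if field not in self._items and len(self._items) >= self.MAX_ITEMS:
            raise LimitExceeded("map is full")
        self._items[field] = value

    def get_all(self) -> dict:
        # The worst case is bounded by MAX_ITEMS * MAX_ITEM_BYTES, so a
        # read-everything call stays cheap for every caller.
        return dict(self._items)

    @classmethod
    def worst_case_bytes(cls) -> int:
        return cls.MAX_ITEMS * cls.MAX_ITEM_BYTES
```

The design choice is where the failure lands: the one writer with the oversized item gets an error, instead of every reader discovering the problem later in their critical path.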

Faux-serverless bandit tricks

Provisioned capacity

Serverless does not impose upfront provisioned capacity. While it is okay for a service to take a hint on how much capacity may be needed, requiring an upfront capacity commitment to use the service is an unnecessary cognitive and financial toll on the developer. The perils of provisioned capacity go beyond the standard ones (pay too much if you overprovision, experience an outage if you underprovision, or both). With capacity provisioning comes capacity management, instrumentation, and benchmarking.

This violates all of our tenets. It’s not simple. If you’re forced to provision capacity upfront, you’re waiting for instances to pop up, so it’s not an instant start. This dedicated capacity cannot scale as fast as a shared pool like DynamoDB, so it’s not instantly elastic. If you’re making decisions about capacity, you’re certainly carrying the burden of ensuring sufficient capacity. Make one bad decision, and you have a capacity-related outage. That’s not a best practice by default.
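The two failure modes of a fixed capacity commitment can be sketched in a few lines of Python. The function name `provisioning_outcome` and the demand numbers are made up for illustration; they do not come from any real workload.

```python
def provisioning_outcome(demand, provisioned):
    """Return (idle_units, rejected_units) for a fixed provisioned capacity.

    demand: requests per interval; provisioned: capacity per interval.
    """
    idle = sum(max(provisioned - d, 0) for d in demand)      # paid for, unused
    rejected = sum(max(d - provisioned, 0) for d in demand)  # outage territory
    return idle, rejected


# Hypothetical demand: quiet most of the time, with one spike.
demand = [10, 10, 10, 80, 10]

print(provisioning_outcome(demand, provisioned=20))  # (40, 60): overpay and still drop traffic
print(provisioning_outcome(demand, provisioned=80))  # (280, 0): no drops, mostly idle
```

Neither setting is right for this toy workload, which is the point: any single number you commit to up front is wrong for most of the day.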

Autoscaling with no mention of instant

Let’s face it. Autoscaling is often not that automatic. It’s slow to engage, complicated to tune, and has sharp edges. Scaling capacity means you are managing capacity.

Autoscaling was a boon when it was first announced in 2009. In the modern world of everything serverless, it’s just too slow and complicated. Time-to-scale is the key metric: it makes the difference between suffering a load-driven outage and remaining available through your spikes. And if the time-to-scale is too long, customers inevitably overprovision to compensate for the slowness.

For example, ElastiCache auto-scaling can take tens of minutes, and its best practices include never scaling down, which means never shedding unused capacity or its cost. If that were not painful enough, you are only able to scale on one of the two dimensions (CPU and memory). This simply translates into “manually overprovisioned capacity.”

To bring this back to our tenets, this is not simple, and you know you don’t have instant elasticity when your autoscaling takes longer to kick in than the duration of your spike. Service providers get away with it by simply letting you configure the autoscaling rules and posting best practices about not scaling down, etc.—which of course means those best practices are not the default.
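A back-of-the-envelope simulation shows why time-to-scale matters more than the mere existence of autoscaling. This is a toy model, not how any particular autoscaler works: `simulate`, `lag`, and the traffic numbers are assumptions chosen for illustration. Capacity requested at step t only becomes usable at step t + lag.

```python
def simulate(demand, base_capacity, lag):
    """Count requests dropped when capacity follows demand `lag` steps late."""
    dropped = 0
    for t, d in enumerate(demand):
        # Capacity available now reflects the demand seen `lag` steps ago.
        seen = demand[t - lag] if t - lag >= 0 else 0
        capacity = max(base_capacity, seen)
        dropped += max(d - capacity, 0)
    return dropped


# Hypothetical spike: 3 steps long, on top of a steady baseline.
demand = [10, 10, 100, 100, 100, 10, 10, 10]

print(simulate(demand, base_capacity=10, lag=0))  # 0: instant elasticity
print(simulate(demand, base_capacity=10, lag=1))  # 90: scaling lags by one step
print(simulate(demand, base_capacity=10, lag=5))  # 270: lag longer than the spike
```

With a lag longer than the spike, the extra capacity arrives after the traffic is gone, so every request above the baseline is dropped and the new capacity then sits idle.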

Capacity minimums

Minimum capacity is not a thing with true serverless. Serverless services are cost effective with a low barrier to entry. They have a minimal footprint that costs little or nothing to run at low scale. This scale-to-zero model enables developers to ship a product without worrying about the cost of overprovisioned capacity. Faux-serverless services often have minimums that run into thousands of dollars per year. This typically leads to mathematical gymnastics and a huge mental burden on developers, which flies directly in the face of the all-important simplicity tenet.

Instances

How many instances are supporting your DynamoDB table or S3 bucket? Probably many—but what makes these services serverless is that you have no idea and you don’t need to worry about it to be successful. There is no such thing as a serverless instance. If you read this in the docs of a service pretending to be serverless, you are being swindled into capacity management.

Faux-serverless services are now getting brazen enough to talk about “serverless instances” in their documentation. Call a spade a spade. Instances are just not instant. They take a while to come up, so you’re certainly not getting an instant start or instant elasticity. If you are working with “serverless instances,” you are violating the simplicity tenet the moment you have to specify how many instances you want or how big they should be. Instances also require care and feeding in the form of configurations. If you’re dealing with instances, you’re dealing with messy configurations rather than best practices by default.

Maintenance windows

Planned downtime has no place in today’s high-availability services. Serverless does not have maintenance windows. It works, all the time. Deployments, upgrades, and scaling events happen without you ever knowing about them. That’s what makes a service serverless. Core tenet alert: weekly downtime is just not a best practice.

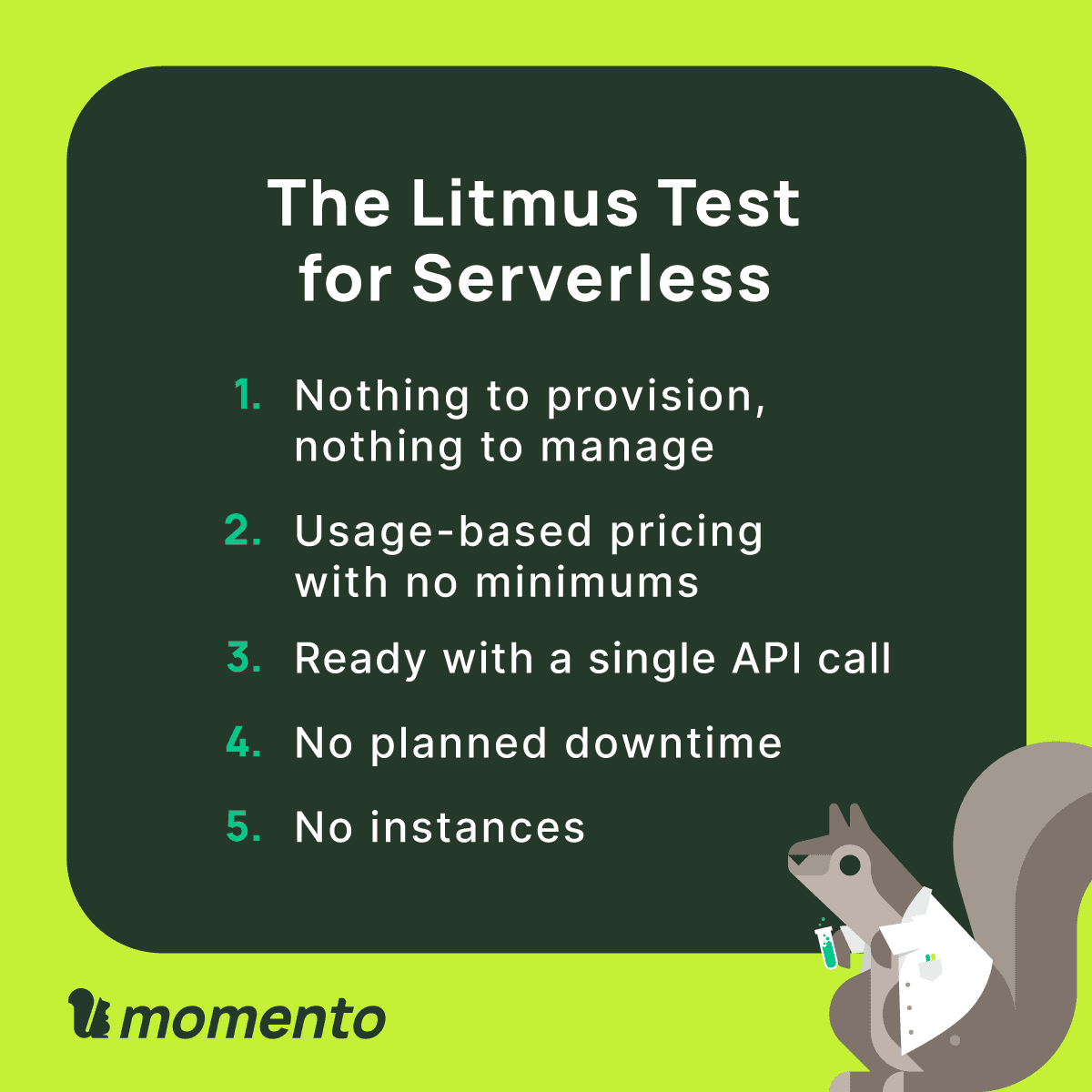

Putting it all together: the Litmus Test for Serverless

Let’s make all of that even simpler. How serverless of us! We present: the Litmus Test for Serverless (LTS).

This test is designed to quickly identify #fauxserverless bandit services. If the service you are evaluating violates any of the aforementioned criteria, you are likely not in serverless land.

As an example, compare Amazon Neptune Serverless against Amazon DynamoDB On-Demand.

Neptune Serverless

- You provision NCUs (Neptune Capacity Units) up front and manage their size via autoscaling rules. Violates LTS criterion #1.

- You have a minimum of two-and-a-half NCUs, which ends up costing more than $3500/year ($7000/year if you want a read replica). Violates LTS criterion #2.

- It’s technically available with a single API call (even though there are some complicated parameters). We’ll give it a pass on LTS criterion #3.

- Neptune Serverless has maintenance windows of 30 minutes per week, during which your updates can start. These updates are not guaranteed to finish within the window, so the impact could be much longer than 30 minutes each week. Violates LTS criterion #4.

- Neptune Serverless has “serverless instances”, which you have to manage and monitor. Violates LTS criterion #5 (and #1, actually). These instances are a leaky abstraction and the root cause of having to manage capacity, deal with planned downtime, and pay for idle instances (aka servers).

- It’s worth mentioning that the red flags described in the previous section are all over Neptune’s docs.
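The minimum-cost figure in the second bullet above is simple arithmetic, sketched here in Python. The hourly rate is an assumption for illustration (roughly $0.16 per NCU-hour), not an official price; actual pricing varies by region and changes over time, so check the current price list before relying on it.

```python
HOURS_PER_YEAR = 24 * 365          # 8760
MIN_NCUS = 2.5                     # minimum capacity cited above
ASSUMED_RATE_PER_NCU_HOUR = 0.16   # assumption, USD; not an official price


def yearly_floor(instances: int = 1) -> float:
    """Cost of sitting at the minimum capacity all year without serving a request."""
    return MIN_NCUS * ASSUMED_RATE_PER_NCU_HOUR * HOURS_PER_YEAR * instances


print(round(yearly_floor()))             # single instance
print(round(yearly_floor(instances=2)))  # with one read replica
```

Under that assumed rate, the floor comes to roughly $3,500 per year for one instance and roughly $7,000 with a read replica, in line with the figures quoted above.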

DynamoDB On-Demand

- No provisioned capacity and no autoscaling rules. LTS criterion #1, check.

- Costs nothing when idle. LTS criterion #2, check.

- Ready with a single API call. LTS criterion #3, check.

- No planned downtime or maintenance windows. LTS criterion #4, check.

- Best of all, no instances! LTS criterion #5, check.
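The comparison above can be written down as a small checklist in Python. This is a sketch, not an official tool: the `Service` fields and the `litmus_test` function are hypothetical names, the criterion numbers follow the numbering used in the two lists above, and the attribute values simply restate the claims made in this post.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    provisioned_capacity: bool  # criterion 1: capacity or autoscaling rules to manage
    minimum_cost: bool          # criterion 2: you pay even when idle
    single_api_call: bool       # criterion 3: ready with one API call
    maintenance_windows: bool   # criterion 4: planned downtime
    instances: bool             # criterion 5: instances exposed to the user


def litmus_test(s: Service) -> list[int]:
    """Return the numbers of the criteria the service violates (empty = passes)."""
    checks = [
        (1, s.provisioned_capacity),
        (2, s.minimum_cost),
        (3, not s.single_api_call),
        (4, s.maintenance_windows),
        (5, s.instances),
    ]
    return [number for number, violated in checks if violated]


neptune = Service("Neptune Serverless", True, True, True, True, True)
dynamodb = Service("DynamoDB On-Demand", False, False, True, False, False)

for service in (neptune, dynamodb):
    violations = litmus_test(service)
    verdict = "serverless" if not violations else f"violates {violations}"
    print(f"{service.name}: {verdict}")
```

To try it on another service, fill in a `Service` from that service's own documentation and see which criteria come back.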

Conclusion

Serverless continues to make a profound impact on the world. We have been on a positive trend towards everything serverless. However, the “everything serverless” future is at risk due to recently launched services masquerading as serverless. Let’s hold the service providers accountable for labeling and promoting services appropriately.

This means, by the way, that we want you to hold us to this standard, too.

Put the Litmus Test for Serverless to the test! Pick your favorite serverless service and check it against the criteria. What do you find? Does it meet the definition of serverless? If not, where is it falling short? Or go the other direction and have a little fun with it. Pick a service that proudly boasts its serverless-ness even though you know in your bones it’s not serverless, and see how it measures up.