Hokkaido Television Broadcasting unlocks cost savings and performance improvements with Momento Cache

Discover how Momento Cache protects Hokkaido Television Broadcasting from traffic spikes—while letting them maintain a fully serverless architecture.

Industry: Media and Entertainment

Use case: Video Distribution

Hokkaido Television Broadcasting Co., Ltd. is a specified terrestrial basic broadcaster that operates a television broadcasting business serving Hokkaido. We are also actively expanding beyond broadcasting into areas such as video distribution and e-commerce (EC) services.

Why was adding a cache necessary?

When the popular program “Wednesday Domo” was broadcast in Hokkaido, online distribution of the same program started immediately afterward, and access to the program distribution service spiked at that moment. We needed a caching mechanism to keep distribution smooth under the high load we anticipated.

Why did we choose Momento?

Our service is built on a fully serverless architecture, and we had not found a cache service that suited this configuration, so we had been unable to introduce a cache.

We hoped that introducing Momento would let us handle access spikes while staying serverless. We also expected it to improve performance.

The results matched our expectations: we were able to provide video distribution services without any problems even when access spikes occurred, and API response times improved greatly.

Combine Momento with your existing full serverless architecture

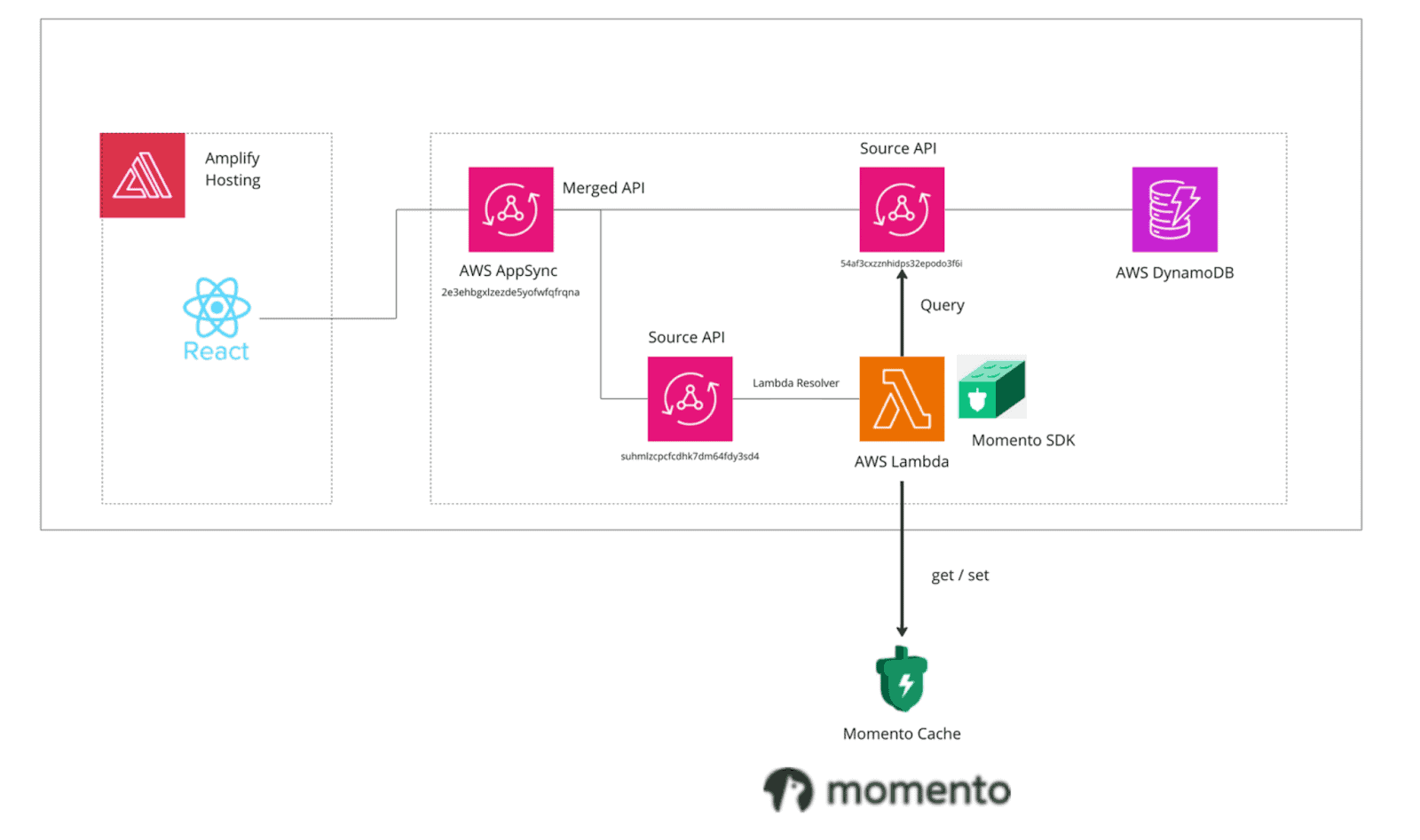

- As a backend for frontend (BFF), we built a GraphQL API using AppSync. In the end, we decided to read from Momento Cache in a Lambda function configured as the resolver.

- Because we were running large, complex queries with many resolvers against AppSync, AppSync’s TokenConsumed had ballooned to 200-300, and we needed to bring it down to a single digit. We also needed to avoid hitting DynamoDB hot partitions.

- We realized that a proper solution meant fundamentally reviewing the backend NoSQL design, but we were less than two months away from production and had no time for a redesign. We decided on a different design that would offload AppSync’s token consumption.

- First, we tried enabling AppSync’s built-in cache. Setting a cache for each resolver addressed the DynamoDB hot partition problem, but we could not find a way to improve TokenConsumed.

- Additionally, AppSync’s cache is based on ElastiCache and is billed by time, so we determined that keeping it running all the time would be difficult given our budget.

- By leveraging AppSync’s Merged API, we could switch only the part of the backend that serves this particular large query to an AppSync API that reads the query’s stored results from Momento. This let us make the change without affecting the rest of the API.
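The read path described above (a Lambda resolver that checks the cache first and only queries the backend on a miss) is commonly called cache-aside. The following is a minimal sketch of that pattern, not our production code. `InMemoryCache` is a hypothetical stand-in for a remote cache client and is not the Momento SDK; `load_program`, the `programId` argument, the key format, and the 60-second TTL are likewise assumptions made for illustration.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class InMemoryCache:
    """Hypothetical stand-in for a remote cache client (NOT the Momento SDK)."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            # Expired entries are dropped and treated as misses.
            del self._items[key]
            return None
        return value

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        self._items[key] = (time.monotonic() + ttl_seconds, value)


def resolve_with_cache(
    cache: InMemoryCache,
    key: str,
    load_from_source: Callable[[], Any],
    ttl_seconds: float = 60.0,  # assumed TTL, chosen only for illustration
) -> Any:
    """Cache-aside read: return the cached value, or load and store it on a miss."""
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_source()  # e.g. the large DynamoDB query
    cache.set(key, value, ttl_seconds)
    return value


def make_handler(cache: InMemoryCache, load_program: Callable[[str], Any]):
    """Build a Lambda-style handler; the event shape here is an assumption."""

    def handler(event: Dict[str, Any], context: Any = None) -> Any:
        program_id = event["arguments"]["programId"]  # hypothetical argument name
        return resolve_with_cache(
            cache,
            f"program:{program_id}",
            lambda: load_program(program_id),
        )

    return handler
```

With this shape, repeated requests for the same program during a spike are served from the cache, and the backend loader runs only on the first request and after each expiry. In a real deployment the stand-in class would be replaced by a client for the actual cache service.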

Time taken for production deployment

It took one week to work out the design, about a week to run load tests in the development environment, and another week to add error handling and deploy to the production environment. Starting from scratch, we reached production in a total of three weeks.

Performance improvements and cost savings with Momento in production

- In terms of performance, retrieving data with AppSync’s DynamoDB resolver used to take around 2 seconds on average. After switching to retrieving data from Momento via the Lambda resolver, it takes around 500 ms.

- Regarding costs, with a cache that charges by the hour, we considered minimizing costs by deploying it only at times when we predicted spikes, but that approach could not handle unexpected spikes in access. Momento, on the other hand, is always available and is billed on a pay-as-you-go basis.

Future with Momento

- I’m very happy that by adopting Momento, we can now think about caching strategies for the entire architecture, including the front end, while staying serverless. I look forward to future support for things like choosing between eventual consistency and strong consistency when writing new data, as this would reduce the amount we need to implement ourselves.

- We decided not to access Momento directly from the front end this time because of time constraints, but the Web SDK was also very easy to implement, so we plan to refactor toward it in the future. I would like to think about where to use it.

Reduce costs and improve performance for video distribution with Momento Cache. Sign up now to try it out.
