How we built Momento Topics, a serverless messaging service

Let’s take a look at the architecture that makes up Momento Topics, a serverless messaging service.

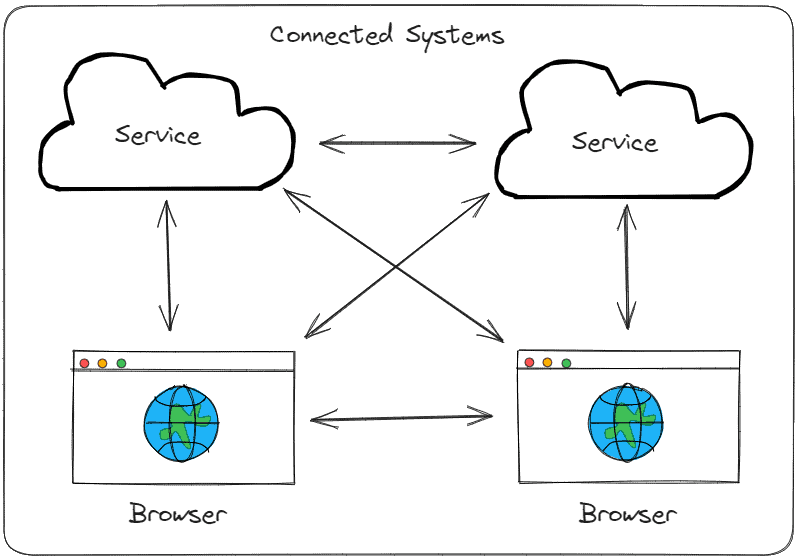

A few months ago, Momento released Topics, a fully-managed serverless messaging service. This service aims to connect everything. You can connect backend service to backend service, backend service to user interface, even user interface to user interface with literally two API calls 👇

```javascript
// sends a message containing “hello world!”
await topicClient.publish("tutorials", "greetings", "hello world!");
```

And to receive the message:

```javascript
await topicClient.subscribe("tutorials", "greetings", {
  onItem: (message) => console.log(message.valueString())
});
```

No resource management, no configuration, just code and go!

Services like Amazon SQS, SNS, or Kinesis are also serverless event messaging services, but they focus on sending and receiving events between backend components.

On the flip side, services like Pusher, AWS AppSync, and AWS IoT Core are intended to be used like WebSockets, connecting backend services to user interfaces. These are fantastic services that abstract away many of the complexities of WebSockets, but we at Momento thought we could continue to improve the developer experience for building real-time communications.

So we built Topics, the dead-simple service that enables everything to communicate with everything… if you want it to.

This highly scalable service is ready for prime time, boasting incredibly low latencies at millions of operations per second (ops/s).

Don’t believe us? Let’s take a look at how it was built and what makes this service shine.

Events and Subscriptions

Let’s first talk about what’s going on when you publish and subscribe through a topic in Momento Topics. Think of a topic as a focused channel of communication between publishers and subscribers, like a dedicated chat room.

When your user interface or backend service subscribes to a topic, it’s telling Momento it wants to be notified when something happens. Specifically, it wants to know when someone publishes, or sends, an event to a topic. Use cases for subscribing could be alerting end users when a teammate signs on or when you receive an instant message.

With Momento Topics, a subscription is a long-lived gRPC connection between the subscriber and the Momento servers. You could think of this as setting up a phone call. Your user interface calls the Topics service and now has an open connection with it, allowing data to instantly transfer directly between the two.

This differs from something like Amazon SNS because SNS does not maintain active connections. Instead, subscribers to SNS would be added to a phone book and the service would know who to call when an event occurs. This results in higher latency but does offer stateless communication. Stateless communication works great when real-time isn’t a requirement, like when you need to send an email or add something to a queue. It’s totally acceptable to have the higher latency in these situations due to the async nature of the workflow.

Topics differs from WebSocket-based services in its transfer protocol. A standard WebSocket connection transfers data over ws or wss. IoT devices typically transfer data via MQTT. Momento Topics uses gRPC, which means your data is transferred over HTTP/2. Let’s look at some of the key differences between these protocols:

WSS

- Pros: Offers full-duplex (simultaneous two-way) communication. Keeps bandwidth overhead low because, once the connection is established, messages do not carry HTTP headers and metadata. Widely supported in browsers and server environments.

- Cons: Can be complex to set up and manage connections. Consumes a high amount of server resources due to connection management.

MQTT

- Pros: Optimized for low-bandwidth, high-latency, or unreliable networks. Offers various levels of message delivery guarantees. Provides last will and testament (LWT).

- Cons: Not natively supported in web browsers. Can have higher latency than wss because of additional protocol overhead, such as the keep-alive mechanism.

HTTP (gRPC)

- Pros: Uses HTTP/2, bringing multiplexing and header compression for faster performance. Enables bidirectional streaming.

- Cons: Uses protocol buffers for serialization instead of JSON or XML. Might not be as familiar for integrators as REST.

Events, on the other hand, need little explanation. The best way I’ve heard event-driven architectures described was from Eric Johnson at Momento’s conference, MoCon. He said “something happened… And we react.” An event is that “something”.

An event can be represented by anything. It can be a boolean, an entity identifier, an entire JSON object, heck, it could even be a photo. With Momento Topics, you provide a byte array as the payload of your event, meaning the possibilities are literally endless. All subscribers will receive the same payload you provide, allowing them to react to the event appropriately.
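To make the "byte array payload" idea concrete, here is a minimal sketch of turning a JSON event into bytes before publishing and back into an object after receiving. The event shape and the helper names `encodeEvent` and `decodeEvent` are made up for illustration and are not part of the Momento SDK; `TextEncoder` and `TextDecoder` are standard globals in browsers and Node.js.

```javascript
// Standard UTF-8 encoder/decoder, available globally in browsers and Node.js.
const encoder = new TextEncoder();
const decoder = new TextDecoder();

// Hypothetical event shape, used only for this example.
const teammateEvent = { type: "teammate-online", userId: "user-123" };

// Serialize an event object to a byte array (Uint8Array).
function encodeEvent(evt) {
  return encoder.encode(JSON.stringify(evt));
}

// Turn a received byte array back into an event object.
function decodeEvent(bytes) {
  return JSON.parse(decoder.decode(bytes));
}

const payloadBytes = encodeEvent(teammateEvent); // what a publisher would send
const decodedEvent = decodeEvent(payloadBytes);  // what a subscriber would read
console.log(decodedEvent.type, decodedEvent.userId);
```

Because every subscriber gets the same bytes, publishers and subscribers only need to agree on the encoding, whether that is JSON as assumed here or something else entirely.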

Now that we understand what subscriptions are and what they are receiving, let’s talk about the architecture.

Service architecture

For me, the biggest reason to use a serverless messaging service is the infrastructure management provided by the vendor. I’m not a sysadmin, I’m an app builder. I don’t know (nor do I want to know) how to load balance connections, set up auto scaling groups, or how to calculate utilization rates and manage spiky workloads.

What I do know is how to provide business value to an application. That’s what I want to spend my time and energy on, not worrying about infrastructure. Luckily for us all, the engineers at Momento know and care deeply about infrastructure. Here’s a peek at how they built Topics to provide serverless capabilities like on-demand scaling, pay for what you use, no scheduled downtime, and no resource provisioning.

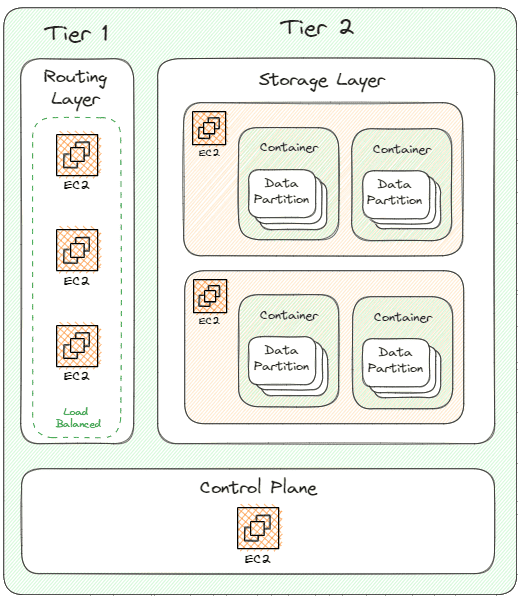

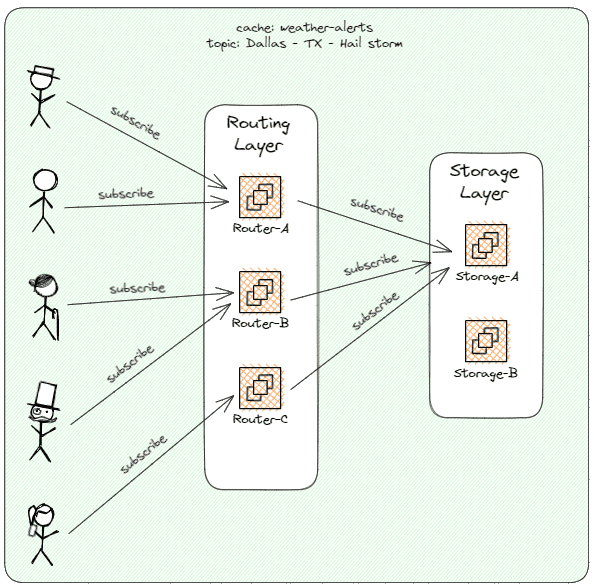

Topics is built on a 2-tier architecture, allowing it to fan out 1000x faster than managing connections yourself. The two tiers are the routing layer and the storage layer.

Routing Layer

Tier 1 is the routing layer. This layer keeps a full topological map of the Momento ecosystem in memory. It’s responsible for fielding requests from the SDKs, managing connections to subscribers, and calculating which tier 2 node holds the topic messages (more on this in a minute).

This layer is an autoscaled fleet of high-throughput EC2 instances. The Topics service uses a round-robin load balancing pattern to distribute incoming subscriptions. When capacity nears a specific threshold, the Control Plane (the brains behind Momento) will warm up another instance, pass the ecosystem topology to it, then add it into the mix.
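As a rough illustration of round-robin distribution, the sketch below hands each incoming subscription to the next routing node in order and wraps around at the end. The `RoundRobin` class and the node names are assumptions for the example; the real service's load balancer and its capacity thresholds are not described in enough detail here to reproduce.

```javascript
// Minimal round-robin selector: each pick() returns the next node in order,
// wrapping back to the first node after the last one.
class RoundRobin {
  constructor(nodes) {
    this.nodes = [...nodes];
    this.nextIndex = 0;
  }

  // Add a newly warmed-up node to the rotation.
  add(node) {
    this.nodes.push(node);
  }

  // Return the node that should take the next incoming subscription.
  pick() {
    const node = this.nodes[this.nextIndex % this.nodes.length];
    this.nextIndex = (this.nextIndex + 1) % this.nodes.length;
    return node;
  }
}

const balancer = new RoundRobin(["Router-A", "Router-B", "Router-C"]);

// Five incoming subscriptions, assigned in arrival order.
const assignments = [1, 2, 3, 4, 5].map(() => balancer.pick());
console.log(assignments);
// → [ 'Router-A', 'Router-B', 'Router-C', 'Router-A', 'Router-B' ]
```

In this simplified model, calling `balancer.add("Router-D")` is the stand-in for the Control Plane adding a fresh instance to the mix.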

Storage Layer

Tier 2 is the storage layer. When events are published to a topic, they land here and are distributed to the connected routing layer nodes.

The neat part of the architecture is in this layer. The storage node only communicates with routing layer nodes, and the routing layer nodes communicate with subscribers. When a new subscription is added, the designated routing node will calculate which storage node owns the topic and establish a long-lived gRPC connection with it.

This is the exact same pattern the router uses with end-user subscriptions. In other words, Momento servers subscribe to other Momento servers and reuse connections whenever possible. Let’s look at an example.

In our example above, we have 3 routing nodes and 2 storage nodes. We have 5 end users all subscribing to the “Dallas – TX – Hail storm” topic in the “weather-alerts” cache. Through a deterministic hash, it’s determined that the topic’s messages will live on Storage-A.

As the connections come in, the requests are being evenly distributed across Router-A, Router-B, and Router-C. On our fourth subscriber, Router-A determines it needs to connect to Storage-A but knows it already has a connection open with it, so the connection is reused!

The same thing occurs for subscriber #5: its routing node reuses the connection it already has with the storage node. When a message is published to a topic, the storage node broadcasts it over its open connections to the routing nodes, and each routing node in turn broadcasts it over its open connections to subscribers.
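The walkthrough above can be modelled in a few lines. This is an in-memory sketch only: there is no networking, a "connection" is just a registered object or callback, and the `StorageNode` and `RoutingNode` classes are hypothetical names for illustration. It also assumes one upstream connection per topic per routing node, which is a simplification of whatever the real service does.

```javascript
// Tier 2: holds the topic and pushes each message once per connected router.
class StorageNode {
  constructor(name) {
    this.name = name;
    this.routersByTopic = new Map(); // topic -> Set of connected routing nodes
  }

  connect(topic, router) {
    if (!this.routersByTopic.has(topic)) this.routersByTopic.set(topic, new Set());
    this.routersByTopic.get(topic).add(router);
  }

  // Broadcast to routers and return how many upstream sends were needed.
  publish(topic, message) {
    const routers = this.routersByTopic.get(topic) ?? new Set();
    for (const router of routers) router.deliver(topic, message);
    return routers.size;
  }
}

// Tier 1: holds subscriber connections and reuses its upstream connection.
class RoutingNode {
  constructor(name, storage) {
    this.name = name;
    this.storage = storage;
    this.subscribersByTopic = new Map(); // topic -> array of onItem callbacks
  }

  subscribe(topic, onItem) {
    if (!this.subscribersByTopic.has(topic)) {
      // First subscriber for this topic on this router: connect upstream once.
      this.subscribersByTopic.set(topic, []);
      this.storage.connect(topic, this);
    }
    // Every later subscriber reuses the existing upstream connection.
    this.subscribersByTopic.get(topic).push(onItem);
  }

  deliver(topic, message) {
    for (const onItem of this.subscribersByTopic.get(topic) ?? []) onItem(message);
  }
}

const storageA = new StorageNode("Storage-A");
const routingNodes = ["Router-A", "Router-B", "Router-C"].map(
  (name) => new RoutingNode(name, storageA)
);
const hailTopic = "Dallas – TX – Hail storm";
const deliveries = [];

// Five subscribers, spread across the three routers in round-robin order.
for (let i = 0; i < 5; i++) {
  routingNodes[i % routingNodes.length].subscribe(hailTopic, (msg) =>
    deliveries.push(`subscriber ${i + 1}: ${msg}`)
  );
}

const upstreamSends = storageA.publish(hailTopic, "Hail reported");
console.log(upstreamSends, deliveries.length); // 3 upstream sends, 5 deliveries
```

The storage node sends the message three times, once per routing node, even though five subscribers receive it. That gap between upstream sends and total deliveries is the point of the two-tier design.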

This allows for fewer connections and a highly scalable fan-out pattern, resulting in blazingly fast message distribution and an elastically scalable experience for developers.

Determining topic location

In the initial conversations before building the Topics service, the team agreed that developers should never have to create topic resources before using them. The team felt that requirement took away from the developer experience and was an unnecessary part of event messaging. The goal of the service was to take on as much of the undifferentiated heavy lifting as possible, leaving application developers with one focus – solving business problems.

So instead of creating topic resources and assigning them a storage location on a server, the team opted to use a rendezvous hash of the subscriber’s account id, cache name, and topic name to dynamically and deterministically point to a storage node.

This means that as routing nodes are shuffled in and out of the load balancer, they don’t need to maintain state about which topics exist and where they live. They can calculate the location in microseconds and route appropriately, which leaves the service more dynamic and resilient.
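For readers who haven't met rendezvous (highest random weight) hashing, here is a minimal sketch of the idea: score every storage node against the key and pick the node with the highest score. The FNV-1a-style hash, the key format, and the `ownerOf` function are assumptions chosen for the example; the hash function and key layout Momento actually uses aren't stated here.

```javascript
// FNV-1a-style 32-bit hash over a string's UTF-16 code units.
// A simple stand-in for illustration, not a production-grade hash.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// Rendezvous hashing: every node gets a score for this key,
// and the node with the highest score owns the topic.
function ownerOf(nodes, accountId, cacheName, topicName) {
  const key = `${accountId}/${cacheName}/${topicName}`; // hypothetical key format
  let best = null;
  let bestScore = -1;
  for (const node of nodes) {
    const score = fnv1a(`${node}|${key}`);
    // Break ties by name so the result never depends on list order.
    if (score > bestScore || (score === bestScore && node < best)) {
      best = node;
      bestScore = score;
    }
  }
  return best;
}

const storageNodes = ["Storage-A", "Storage-B", "Storage-C"];
const owner = ownerOf(storageNodes, "account-123", "weather-alerts", "Dallas – TX – Hail storm");
console.log(owner);

// Any router that knows the same node list computes the same owner,
// with no lookup table and no shared state.
const sameOwner = ownerOf([...storageNodes].reverse(), "account-123", "weather-alerts", "Dallas – TX – Hail storm");
console.log(owner === sameOwner); // true
```

A useful property of this scheme is that removing a node that does not own a topic never moves that topic, because the winning score is unaffected. Only topics owned by the removed node get reassigned.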

Summary

Topics runs on the same hardware as our caching service. It’s been intentionally designed to be low latency, highly scalable, and ready at a moment’s notice.

Using the 2-tier architecture allows us to fan out subscriptions and provide you with low latency message delivery, no matter how many subscribers you have. This type of architecture is what differentiates Momento Topics from other event messaging services. Many other services have the capability to run this way, but most of them don’t manage it for you.

Momento does. This means you can sleep at night knowing you won’t receive a call at 3am stating your messages aren’t being delivered. That’s on us.

Remember that subscriptions are gRPC connections, and they work like phone calls. A phone call is stateful, meaning both you and the person you’re calling have to be on the line for it to work. This means trying to subscribe from a serverless function like AWS Lambda or a Cloudflare Worker won’t work. When function execution is over, your code is put on pause, so your phone call is put on hold and eventually disconnects. Subscribe from stateful environments like a browser, container, or server.

That said, you can publish from anywhere. Publishing data does not set up a connection, so feel free to use it in all your functions.

We want you to be successful with Topics. Let us know if you have any questions, comments, or concerns. We believe in open architecture, so if you want to dig a little deeper into something, reach out! We are happy to share how it works.

You can reach the team directly through the website. We look forward to hearing from you and seeing what you build!

Happy coding!