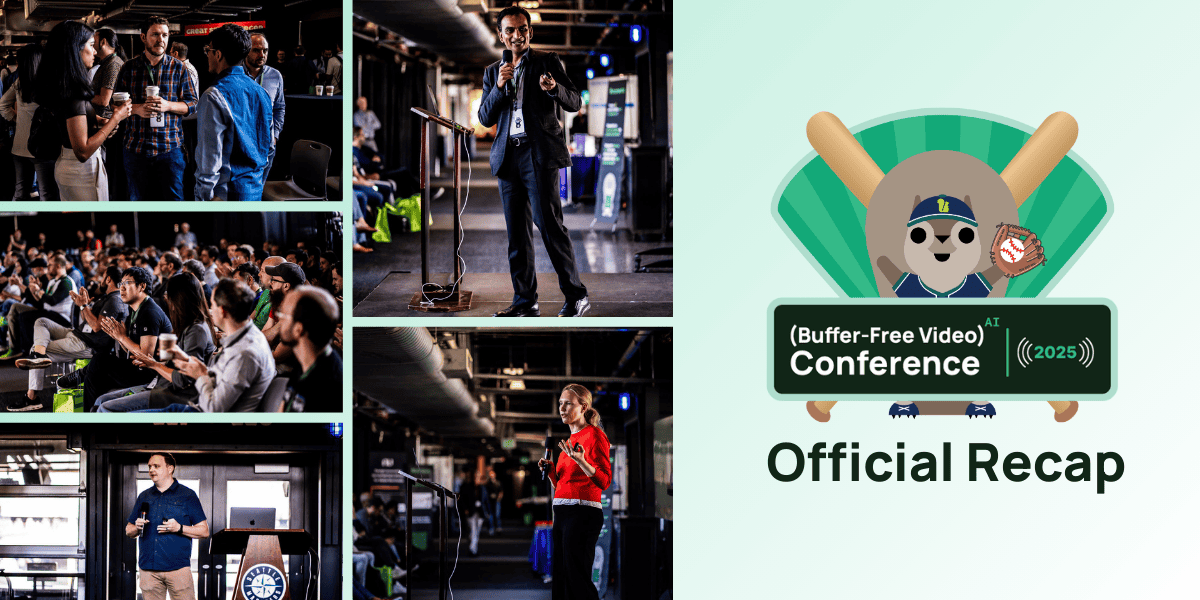

MoCon 2023: A Day of Inspiration and Innovation

Relive MoCon and gear up for what's next in the world of serverless and AI

On August 3rd, we at Momento put on our very first conference – MoCon. We brought some of the greatest minds in serverless, AI, and caching together for a day and let me just say…WOW.

The event was held at T-Mobile Park in Seattle, WA on an absolutely perfect day: 70°F (21°C for non-Americans), sunny skies, and an electric buzz of excitement and vigor in the air. I couldn’t imagine a better day to be hanging out at a baseball field with some incredibly smart people.

Doors opened at 8am, and a tidal wave of excited attendees poured through the doors, making a beeline for the coffee and breakfast. Once everyone was settled, Momento co-founders Khawaja Shams and Daniela Miao hit the stage to start the conference off with a bang.

Opening remarks

Daniela and Khawaja walked through the history of Momento, explaining “why caching” and how being truly serverless has driven so many of its product decisions. They wove in the story of how Momento Topics came to be: they noticed developers spending too much time managing subscriptions, connections, and the other details needed to run event messaging systems.

The conversation then drifted to the problems developers face today. What are developers spending time on that they shouldn’t be? What burdens can Momento take off their plates to make them 10x as productive?

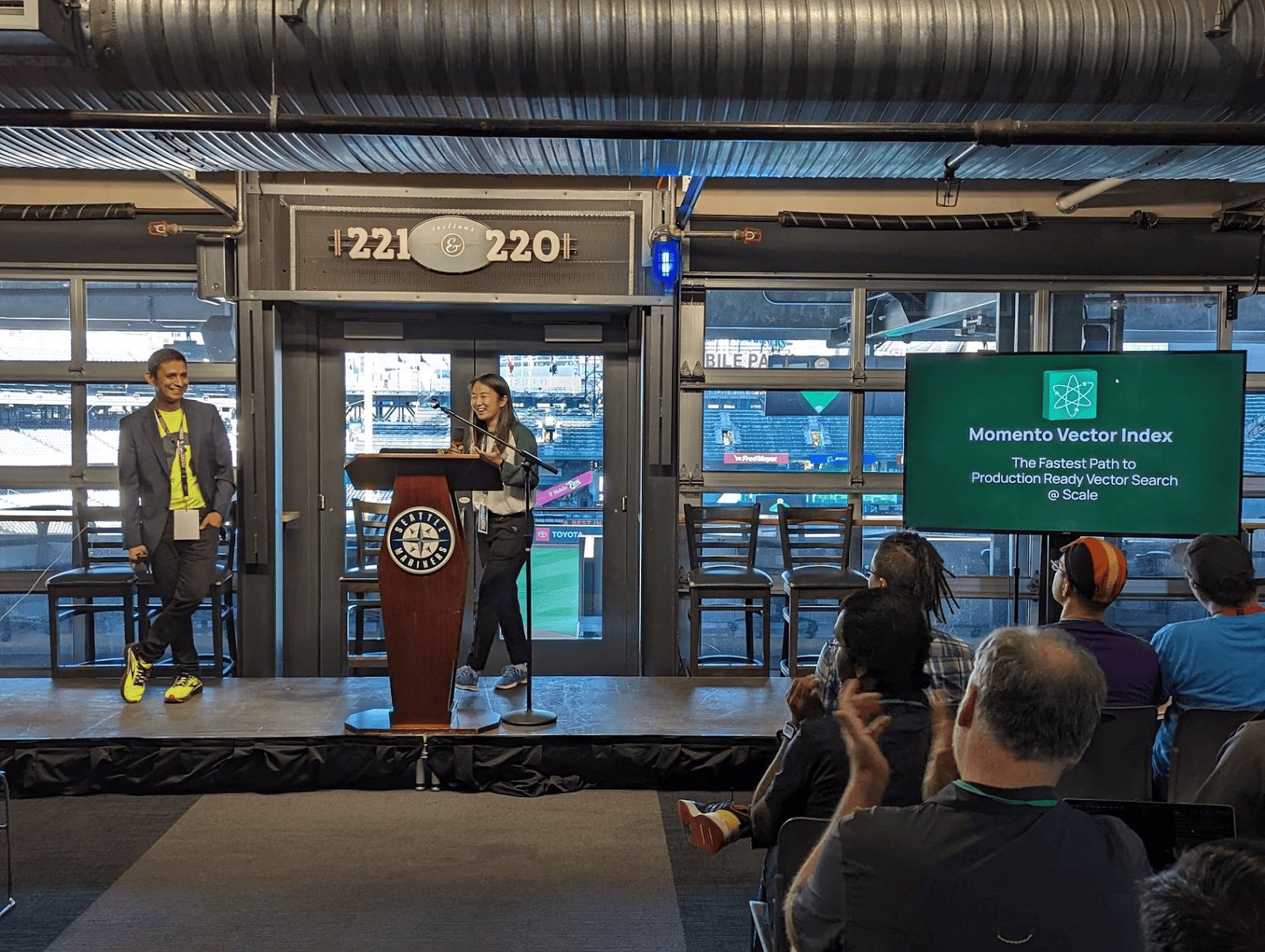

That’s when Daniela and Khawaja hit the audience with a huge announcement: the Momento Vector Index!

The third serverless service in the Momento arsenal (alongside Cache and Topics), the Momento Vector Index is poised to abstract away the management of your vector database. It takes care of schema and dimension management for you, giving end users two simple calls: add to index and search.

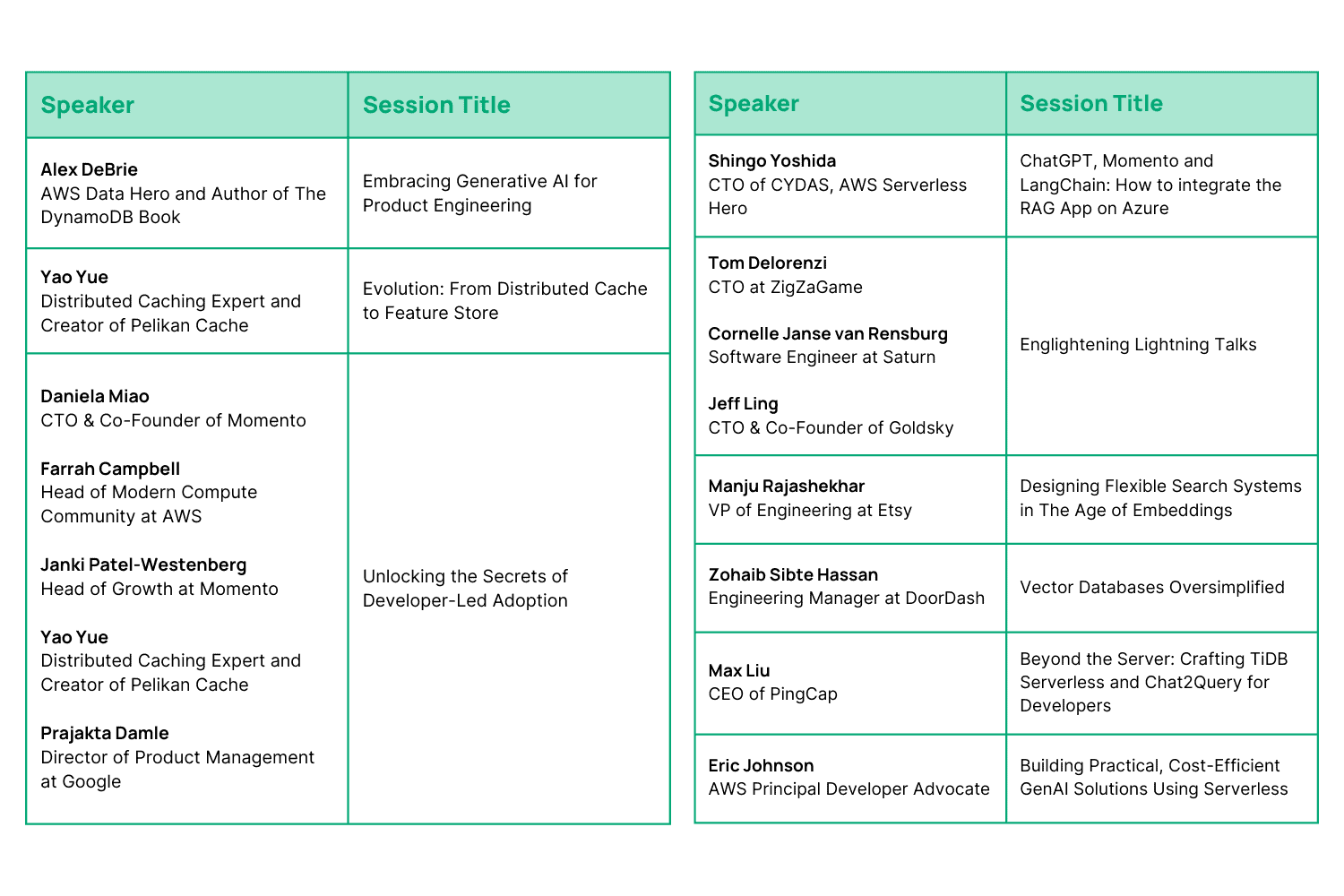

Sessions

As popular as AI is in the tech world right now, there’s still a lot of mystery around it. Most of the sessions at the event focused on generative AI, vector databases, and embeddings. There was a touch of “how to use AI better” with a heavy emphasis on “how AI works”, which was incredibly fascinating.

The keynote presentation was given by Alex DeBrie and was as funny as it was educational. Alex broke down the major components of generative AI and explained how all the pieces fit together. He literally and figuratively set the stage for the rest of the day.

Instead of summarizing the rest of the day’s sessions, I’ll drop the link to the session list, which recaps them better than I ever could. Even better, we recorded every session, and they’re all now available on our YouTube channel.

Non-session mischief

When sessions weren’t… in session… there was plenty to see, do, and talk about around the conference. First of all, there was a QR code scavenger hunt for attendees to chase down. We went around looking for codes on the walls, floor, and ceiling, trying to get to the top of the “who found the most” leaderboard.

Naturally, there was one code hidden on a scrolling digital board that drove people crazy. I think I got more “where is the last QR code” questions than anything else. It was hilarious as people hurried by me muttering “I’ve already scanned that one…” to themselves.

Besides that, we got to run the bases on the field, which is quite the experience! We talked about creative caching use cases (so many I’d never heard of!), played a racing game built on Momento Topics, and threw down some cornhole.

By the numbers

Who doesn’t love some good ol’ fashioned numbers? Let’s take a look at some stats:

- 160 attendees (including 5 AWS Heroes)

- 75 companies represented

- 11 sessions

- 1 service launch

- 412 QR codes scanned

To say this was a resounding success would be an understatement. The participation, feedback, attendees, and execution of the event far exceeded what you’d expect from a two-year-old startup running its first conference. I owe a tremendous THANK YOU to everyone at Momento, all our conference attendees, and everyone in the tech community who engaged with us on social media. ❤️

What’s next?

So, where do we go from here? This was Momento’s first community event, and to have the turnout we did was as heartwarming as it was exciting. We love the community, both virtual and IRL, and we’re going to do our darndest to help as many developers learn and grow as we can.

With that in mind, we’ll be starting a series of in-person meetups we’re calling Mo Meetups. Aimed at helping builders do what they do best, these meetups will be workshops we lead around the US between now and AWS re:Invent. Watch our Twitter or LinkedIn for more details and for where we’ll be in the upcoming months.

If you’re attending AWS re:Invent, we’ll see you there! We’ll bring more exciting games, announcements, and mind-bending use cases with us.

If you’re feeling the FOMO from MoCon, you’re in luck! As I mentioned earlier, the sessions are all recorded. We have big plans for MoCon as an annual event, so if you want to attend in person but couldn’t this year, we’ll see you in 2024!

Happy coding!