Cloud architectures are moving toward serverless-first designs that combine serverless databases, event-driven serverless functions, and microservices to effortlessly meet your customers’ demands. Amazon S3 was one of the first serverless cloud services, released back in 2006. It fits the definition of serverless by giving you fast, easy access to object storage with no servers to manage or scale. Amazon DynamoDB followed in 2012 and changed the game with its ease of use and its ability to serve massive-scale applications.

Simply put, old “serverful” database processes and management have trouble keeping up with the performance and scaling complexity that today’s cloud-scale applications require. As a result, data stores of every model (relational, key-value, document, graph, and time-series) are transitioning to serverless. Developers want to access their data through APIs, and they don’t want to manage a database cluster, even through a managed service.

The launch of AWS Lambda in 2015 brought serverless into the mainstream and established it as a common architectural practice. Serverless, event-driven functions such as AWS Lambda and Google Cloud Functions run your compute only in response to the events and actions you specify, and they eliminate the time you spend on infrastructure management and operations.

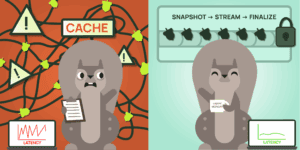

With the rise of Functions-as-a-Service (FaaS), the gold-standard serverless stack in the AWS ecosystem combines Amazon API Gateway, AWS Lambda, and either Amazon DynamoDB or Amazon Aurora Serverless v2. In the Google Cloud ecosystem, the equivalent stack is Google Cloud API Gateway, Google Cloud Functions, and Google Cloud Datastore. But both stacks are missing a piece: a serverless cache. A powerful serverless database service such as Amazon DynamoDB or Google Cloud Datastore deserves to be paired with an equally powerful serverless cache.
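To make the missing piece concrete, here is a minimal sketch of the cache-aside pattern a function in this stack might use to pair a cache with its database. It reads from the cache first; on a miss, it reads from the database and populates the cache. The `InMemoryTTLCache` class, the `get_with_cache_aside` function, and the `ttl_seconds` parameter are hypothetical illustrations, not Momento's API or any cloud SDK. The dictionary stands in for a real cache service, and the loader callback stands in for a DynamoDB or Datastore read.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class InMemoryTTLCache:
    """Hypothetical stand-in for a cache service: a dict with per-key expiry."""

    def __init__(self) -> None:
        self._store: Dict[str, Tuple[Any, float]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            # Expired: drop the entry and report a miss.
            del self._store[key]
            return None
        return value

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        self._store[key] = (value, time.monotonic() + ttl_seconds)


def get_with_cache_aside(
    cache: InMemoryTTLCache,
    key: str,
    load_from_db: Callable[[str], Any],
    ttl_seconds: float = 60.0,
) -> Any:
    """Cache-aside read: try the cache first, fall back to the database on a miss.

    Note: a stored value of None is indistinguishable from a miss in this sketch.
    """
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_db(key)           # hypothetical database read (e.g. DynamoDB)
    cache.set(key, value, ttl_seconds)  # populate so the next read is a hit
    return value


# Demo: two reads of the same key reach the "database" only once.
db_reads = []


def fake_db_read(key: str) -> dict:
    db_reads.append(key)
    return {"id": key, "name": "example"}


demo_cache = InMemoryTTLCache()
get_with_cache_aside(demo_cache, "user#1", fake_db_read)
get_with_cache_aside(demo_cache, "user#1", fake_db_read)
print("database reads:", len(db_reads))
```

In a real deployment the cache would live outside the function instance. A per-process dictionary like this one is lost whenever the instance is recycled and is not shared across concurrent instances, which is why a function-based stack needs an external cache service rather than in-process memory.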

A managed cache service where you provision and manage nodes in a managed cluster is the preferred model of yesteryear. Today, developers want a serverless model, where they simply consume their data through APIs and have no infrastructure or database to manage.

To date, there have been various hacks and workarounds for serverless caching, but no straightforward solution. The legacy caching services are all cluster-based and cannot truly autoscale. One challenge of scaling traditional caches is that a typical cache node supports a fixed maximum number of client connections relative to its total cache size. To handle more connections, you must add nodes whether or not you need the extra memory, which leads to overprovisioning. And if the provisioned connections can’t keep up with demand, the cache becomes a weak spot that, in the worst case, causes outages.
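A small worked calculation shows how a per-node connection limit drives overprovisioning. The sketch below sizes a cluster of identical nodes that must satisfy both a memory requirement and a connection requirement; whichever needs more nodes wins. The function `plan_fixed_node_cluster` and every number in the example (20 GB of data, 25 GB nodes, 65,000 connections per node, 200,000 peak connections) are hypothetical inputs chosen for illustration, not the limits of any specific product.

```python
import math
from dataclasses import dataclass


@dataclass
class ClusterPlan:
    nodes: int
    provisioned_memory_gb: float
    memory_utilization: float  # fraction of provisioned memory actually holding data


def plan_fixed_node_cluster(
    data_gb: float,
    peak_connections: int,
    node_memory_gb: float,
    max_connections_per_node: int,
) -> ClusterPlan:
    """Size a cluster of identical nodes against BOTH memory and connection limits.

    All parameters are hypothetical inputs, not limits of any real service.
    """
    nodes_for_memory = math.ceil(data_gb / node_memory_gb)
    nodes_for_connections = math.ceil(peak_connections / max_connections_per_node)
    # The binding constraint wins: you pay for whichever requirement needs more nodes.
    nodes = max(nodes_for_memory, nodes_for_connections, 1)
    provisioned = nodes * node_memory_gb
    return ClusterPlan(nodes, provisioned, data_gb / provisioned)


# Hypothetical workload: a small dataset but a connection-heavy burst
# (for example, many concurrent function instances each opening a connection).
plan = plan_fixed_node_cluster(
    data_gb=20,
    peak_connections=200_000,
    node_memory_gb=25,
    max_connections_per_node=65_000,
)
print(plan)
```

With these made-up numbers, memory alone needs one node, but connections need ceil(200,000 / 65,000) = 4 nodes. The cluster therefore provisions 100 GB to hold 20 GB of data, leaving 80% of its memory idle purely to satisfy the connection limit.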

You can finally fill this gap and go fully serverless with Momento Cache. Momento is the world’s first genuinely serverless cache: it automatically adapts to traffic bursts, cache hit rates, and tail latencies. Momento Cache automatically manages all your hot keys, shards, replicas, and nodes, which helps keep your applications up and highly responsive at any scale. It does all of this in a serverless architecture that lets you deploy your cache in minutes, instead of spending weeks on testing and overprovisioning inefficient cluster-based services. Choose your cloud and accelerate your other serverless tools, such as Amazon DynamoDB, AWS Lambda, Amazon Aurora Serverless v2, Google Cloud Functions, and Google Cloud Datastore.

Randy Preval