The 5 Metrics that Predict Cache Outages

Learn the 5 critical metrics that predict cache performance issues before they impact users. From P999 latency to cache miss rates - learn what actually matters in production.

When we built Momento, we had to answer a fundamental question: what metrics actually predict problems in production caching infrastructure?

Not the vanity metrics. Not the ones that look good on dashboards. The ones that wake you up at 2 AM because your database is melting or your P999 latency just spiked to 500ms.

After running caching infrastructure at scale, here are the five metrics we obsess over internally and what to watch for.

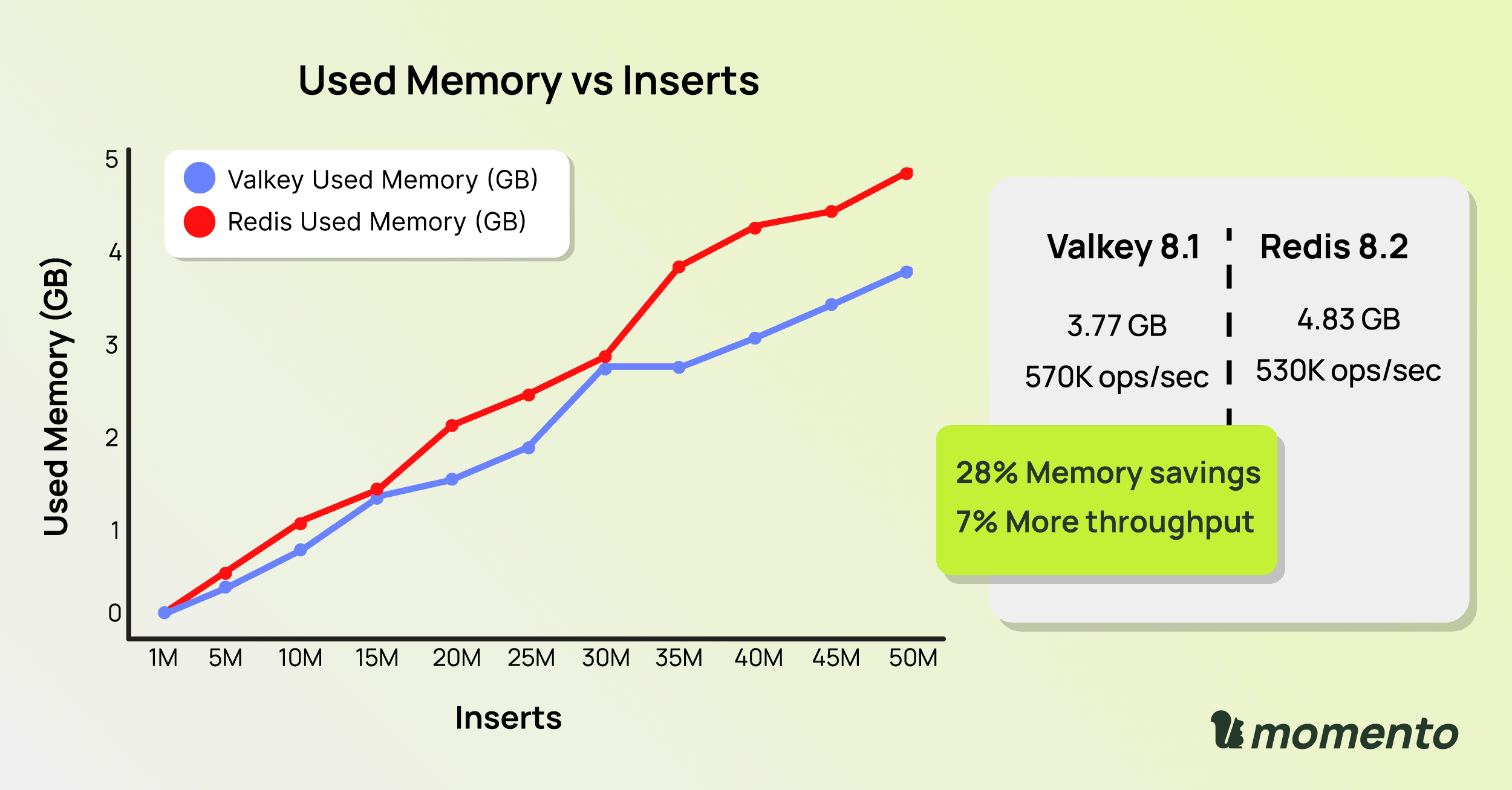

Memory Utilization and Eviction Rate

Everyone monitors used_memory against maxmemory. But the metric that actually matters is your eviction rate.

When evictions start climbing, you’re fundamentally changing your cache’s behavior. Keys applications expect are getting dropped. Hit rates collapse. Database load spikes. Tail latencies explode from sub-millisecond to hundreds of milliseconds.

At Momento, we don’t let our storage nodes run at 95% memory utilization. That’s not “efficient capacity planning,” that’s a production incident waiting to happen. One traffic spike at 95% utilization means aggressive eviction, and aggressive eviction means your cache stops being a cache.

Watch for: Track evicted_keys metric – any sustained evictions above baseline means you need more capacity now.

Connection Churn

Many teams track connected_clients and move on. The real signal is connection churn – how many new connections you’re creating per second.

We’ve debugged this scenario dozens of times: new code deploys, connection counts look stable, then two hours later the cluster fails. The new code wasn’t pooling connections properly – creating and destroying thousands per second.

Watch for: Track total_connections_received and monitor the rate. Under steady traffic, you should see near-zero connection creation. If you’re creating dozens per second, your connection pooling is broken.

P999 Latency

P99 tells you what the slowest 1% of requests look like. P999 tells you what the slowest 0.1% look like. For a service handling 100,000 requests per second, P999 represents 100 requests every second. That’s not a tail – that’s a user experience problem at scale.

The gap between P99 and P999 is revealing. If P99 is 2ms but P999 is 80ms, you’ve got resource contention or network issues affecting a subset of requests.

Sub-millisecond P999 latency isn’t a nice-to-have – it’s the entire value proposition of caching for most production workloads. If your cache adds 50ms to even 0.1% of requests, it’s not making your application faster. It’s making it unpredictable. And unpredictable latency is worse than consistently slow latency.

Our internal SLA targets P999, not P99, because that’s where real systems break.

Watch for: P999 spiking while P99 stays flat (means a small percentage of requests are getting hammered by slow commands or resource contention).

Cache Miss Rate

Most dashboards show cache hit rate. We track cache miss rate (CMR).

This isn’t semantics, it’s a mental model shift. Cache hit rate dropping from 99% to 98% sounds trivial. A 1% drop seems fine, right? But reframe it: your cache miss rate just doubled from 1% to 2%. That’s a 100% increase in requests hitting your database.

We’ve seen production systems with 98% hit rates crashing down because the 2% of misses were expensive queries – 500ms database calls that overwhelmed backends the moment miss rates spiked.

Momento obsesses over cache miss rate spikes because they directly correlate to database load spikes. A cold cache, a botched deployment, a scale event – they all cause CMR to spike.

You need to track miss rate and miss latency together. If your misses cost 10-20ms, a 5% miss rate might be acceptable. If your misses cost 500ms, you need 99.5%+ hit rates or you need to rethink what you’re caching.

Watch for: CMR spikes during deployments, scaling events, or node failures.

Replication Lag

If you’re running Valkey with replication, lag between primary and replica is your early warning system.

Sustained lag above 1-2 seconds means something’s breaking: network saturation, write throughput exceeding replication capacity, or overloaded replicas.

We treat replication lag as a capacity planning signal. When lag trends upward, we add capacity or rebalance load before it cascades into failover problems.

Watch for: Monitor the difference between master_repl_offset and slave_repl_offset. Sustained lag above 1-2 seconds or unbounded growth means you need to act.

What We’ve Learned

Every metric here is available in open source Valkey right now. But knowing what to monitor is only 10% of the problem. Building systems that automatically respond to these signals – that warm nodes during deployments, that scale before CMR spikes, that maintain sub-millisecond P999 under load – that’s the other 90%.

The difference between a team that gets paged at 2 AM and a team that sleeps through the night isn’t luck. It’s picking the right metrics and treating them as predictive signals, not reactive alarms.

Start with these five. Establish baselines. Set alerts on rate-of-change. When those alerts fire, you’ll know exactly what’s breaking before your users do.