How we turned up the heat on Node.js Lambda cold starts

We reduced a customer’s Lambda cold starts by 90%—and then did the same for ourselves!

A customer was writing their first Node.js Lambda function using Momento. They reported cold start times of over 1 second for a simple function that just made one Momento API call.

Unacceptable! We decided to dive in and investigate… Here were our initial observations about their Lambda environment:

- The only thing this Lambda function was doing was making a single gRPC call to Momento and returning the response. The entire Lambda function was only around 25 lines of code.

- Built and bundled using Webpack

- Deployed using the Serverless Framework

- It had a single dependency on the @gomomento/sdk

- Original bundle size was 1.5mb

- Cold start times were ~1000ms

- Lambda function was configured with 768mb of memory

Our goals with this deep dive into Lambda cold starts

We wanted to accomplish the following from our investigation:

- Minimize Lambda package size

- Minimize Lambda cold start time

- Find the right balance between Lambda memory size and billed duration/total cost

The first thing we tried to improve cold start times was to adjust the Lambda memory size. We tested memory configurations of 256mb, 512mb, and 1024mb, but cold start times did not change in any meaningful way.

Next, we tried using the serverless-esbuild plugin. We use esbuild internally to build our Node.js Lambda functions, and have had good results. We didn’t have any experience with this plugin, but thought it would be worth a shot.

```bash
npm i --save-dev serverless-esbuild
```

And then appended these lines to their serverless.yml file:

```yaml
plugins:
  - serverless-esbuild

custom:
  esbuild:
    bundle: true
    minify: true
    packager: 'npm'
    target: 'node18'
    watch:
      # anymatch-compatible definition ()
      pattern: ['src/**/*.js']
```

This simple optimization reduced the bundle size from 1.5mb → ~260kb. When deployed with the same 768mb of configured memory, the cold start time went down to ~100ms. That’s a 90% reduction! The customer was extremely happy with the result, and used this knowledge to reduce another Node.js Lambda function they had from 260kb → 42kb.
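As a quick check on the arithmetic, the reductions quoted above can be recomputed with a small helper. This is only an illustrative sketch: `percentReduction` is a name made up for this example, and 1.5mb is treated as 1500kb.

```typescript
// Percentage reduction going from a "before" value to an "after" value.
function percentReduction(before: number, after: number): number {
  if (before <= 0) throw new RangeError('before must be positive');
  return ((before - after) * 100) / before;
}

// Figures quoted in the text: ~1000ms -> ~100ms cold start, 1.5mb -> ~260kb bundle.
const coldStartReduction = percentReduction(1000, 100);
const bundleReduction = percentReduction(1500, 260); // ASSUMPTION: 1.5mb taken as 1500kb

console.log(`cold start time reduced by ${coldStartReduction.toFixed(0)}%`);
console.log(`bundle size reduced by ${bundleReduction.toFixed(0)}%`);
```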

This got us thinking: What was this magical plugin doing, and how could we do something similar to bundle Node.js Lambda functions to reduce our own cold start times?

Momento Node.js Lambda functions

As mentioned above, we build our own Node.js Lambda functions with esbuild rather than the Serverless Framework, and we deploy them with CDK. Although it would be valid to adopt the Serverless Framework for building and bundling while continuing to deploy with CDK, we wanted to see if we could get similar improvements without adding a new build dependency.

I chose to perform this experiment with a Lambda function that we are actively using in production in order to have a real-world example. Using a test Lambda function with no/limited dependencies is not a fair comparison. If the solution we come out with only works with Lambda functions with no dependencies, it won’t be very useful.

The Lambda function I picked to optimize started out with a bundle size of 2.8mb. We are going to be tweaking a few parameters to see how we can optimize cold starts:

- How we are bundling

- Memory size

- Architecture: we currently use x86 for our Lambda architecture, but while we were deep diving on performance, we thought it was also worth looking at the Arm architecture to see how it affects cold starts and billed durations

First things first: we want to get a baseline for performance in our current production environment, and then see how we can improve it going forward. Our production Lambda function is zipped to 2.8mb. It gets deployed as a 256mb memory function using the x86 architecture, running the Node.js 18 runtime.
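The tests that follow compare cold start time and billed duration. One way to collect those numbers (a sketch, not necessarily the tooling behind the tables in this post) is to parse the REPORT line that Lambda writes to CloudWatch Logs after each invocation; its Init Duration field only appears on cold starts. The `parseReportLine` helper, the `LambdaReport` type, and the sample log lines below are all hypothetical.

```typescript
// Subset of the fields found in a Lambda REPORT log line.
interface LambdaReport {
  durationMs: number;
  billedDurationMs: number;
  memorySizeMb: number;
  initDurationMs?: number; // only present on cold starts
}

function parseReportLine(line: string): LambdaReport | undefined {
  if (line.indexOf('REPORT ') !== 0) return undefined;

  // Fields are tab-separated and look like "Billed Duration: 42 ms".
  // The first field holds the request ID, so it is skipped.
  const fields: { [label: string]: number | undefined } = {};
  for (const part of line.trim().split('\t').slice(1)) {
    const sep = part.indexOf(': ');
    if (sep === -1) continue;
    const value = parseFloat(part.slice(sep + 2)); // parseFloat ignores the trailing unit
    if (!isNaN(value)) fields[part.slice(0, sep).trim()] = value;
  }

  const durationMs = fields['Duration'];
  const billedDurationMs = fields['Billed Duration'];
  const memorySizeMb = fields['Memory Size'];
  if (durationMs === undefined || billedDurationMs === undefined || memorySizeMb === undefined) {
    return undefined;
  }
  return { durationMs, billedDurationMs, memorySizeMb, initDurationMs: fields['Init Duration'] };
}

// HYPOTHETICAL log lines, written by hand for this example.
const coldLine =
  'REPORT RequestId: 00000000-0000-0000-0000-000000000000\tDuration: 41.27 ms\tBilled Duration: 42 ms\tMemory Size: 256 MB\tMax Memory Used: 91 MB\tInit Duration: 612.40 ms\t';
const warmLine =
  'REPORT RequestId: 00000000-0000-0000-0000-000000000001\tDuration: 9.80 ms\tBilled Duration: 10 ms\tMemory Size: 256 MB\tMax Memory Used: 91 MB\t';

console.log(parseReportLine(coldLine));
console.log(parseReportLine(warmLine));
```

A run is a cold start exactly when `initDurationMs` is defined, which makes it easy to separate cold and warm invocations when averaging results.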

Testing with a few different configurations, these were the results with the 2.8mb bundle:

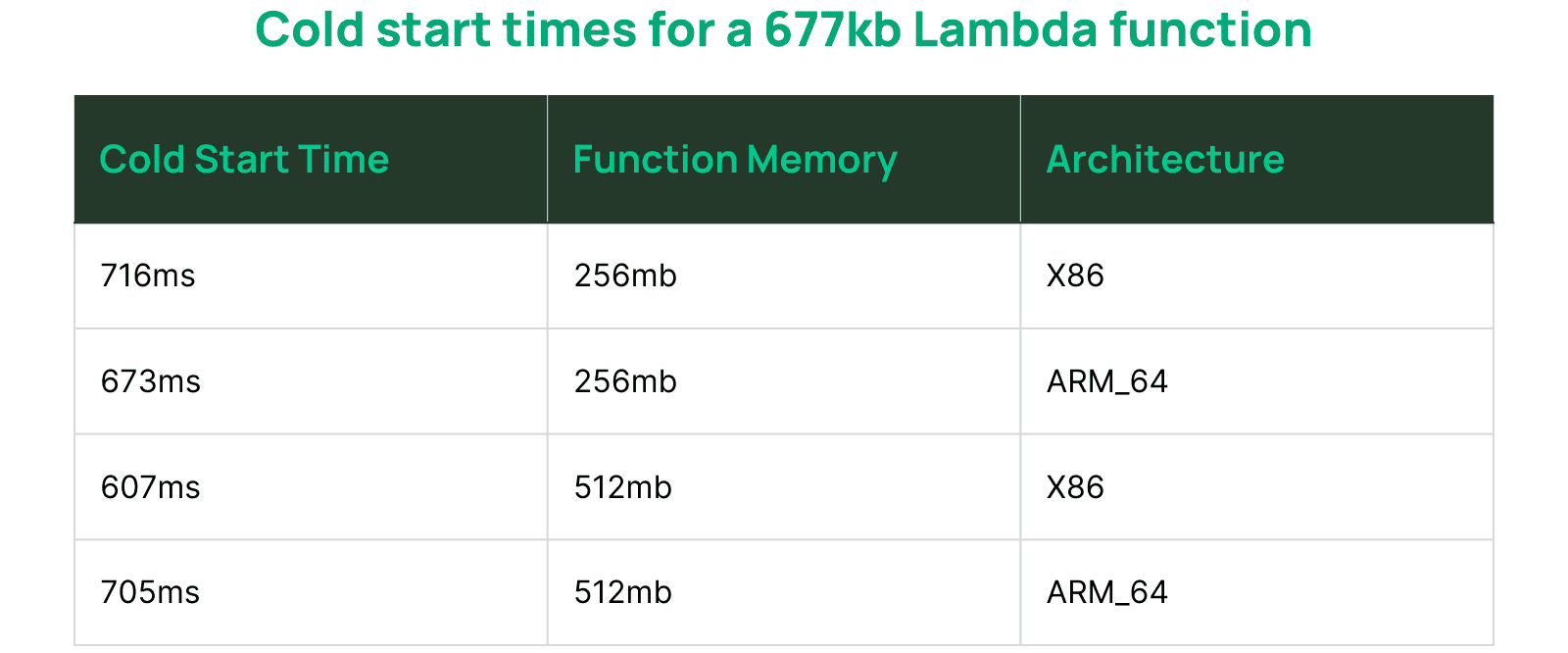

Now let’s use esbuild to bundle our Lambda function. The first thing to note is that the bundle size was reduced from 2.8mb → 677kb immediately. These are the results running the exact same tests with the 677kb bundle:

Cold start times were reduced significantly using esbuild. The billed duration for each run looks around the same as our current production runs, which makes sense since bundle size should really only affect cold start times.

The next thing I wanted to try was minifying our output file. This should, in theory, reduce the bundle size since it removes whitespace and other redundant data from the output file. To do this, we add the line minify: true to the build call in our esbuild config file:

```typescript
// entryPoints, outDir, and functionsDir are defined earlier in the build script
build({
  entryPoints,
  bundle: true,
  outdir: path.join(__dirname, outDir),
  outbase: functionsDir,
  platform: 'node',
  sourcemap: 'inline',
  write: true,
  tsconfig: './tsconfig.json',
  keepNames: true,
  minify: true, // this line
}).catch(() => process.exit(1));
```

The result of building the Lambda function is now 2.7mb. This was surprising! I was expecting that this would have reduced the bundle size more.

Next, let’s try externalizing the source maps. Source maps help link code with its minified counterpart. They are used for debugging—for example, to get line numbers for errors—and they can be removed for production. As the bundle size directly affects our performance, having a smaller bundle size outweighs bundling our application with inline sourcemaps. It’s worth noting that the serverless-esbuild library also does not bundle source maps inline with the Lambda asset.
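To see why inline source maps matter for size, it helps to look at where they live. With sourcemap: 'inline', esbuild appends the map to the end of the output file as a base64 data URL in a `//# sourceMappingURL=` comment, so every byte of the map ships inside the bundle. The sketch below measures that trailing comment in a bundle string. The `measureInlineSourceMap` helper and the sample bundle are hypothetical and exist only for illustration.

```typescript
// Prefix of the trailing comment that carries an inline source map.
const INLINE_MAP_PREFIX = '//# sourceMappingURL=data:application/json;base64,';

// Splits a bundle into "code" and "inline source map" sizes (in characters).
function measureInlineSourceMap(bundle: string): { codeChars: number; mapChars: number } {
  const start = bundle.lastIndexOf(INLINE_MAP_PREFIX);
  if (start === -1) return { codeChars: bundle.length, mapChars: 0 };
  return { codeChars: start, mapChars: bundle.length - start };
}

// HYPOTHETICAL bundle: a tiny handler followed by a stand-in base64 payload.
// A real source map is usually far larger relative to the code.
const sampleCode = 'exports.handler=async()=>({statusCode:200});\n';
const sampleBundle = sampleCode + INLINE_MAP_PREFIX + 'eyJ2ZXJzaW9uIjozfQ==';

const measured = measureInlineSourceMap(sampleBundle);
console.log(`code: ${measured.codeChars} chars, inline source map: ${measured.mapChars} chars`);
```

Running something like this against a real handler.js built with inline source maps gives a rough idea of how much of the file is map rather than code.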

To remove source maps from the output, change sourcemap: 'inline' to sourcemap: 'external'. The output of our build folder now looks like:

```
dist
├── integrations-api
│   ├── handler.js
│   ├── handler.js.map
│   └── integrations-api.zip
└── webhooks
    ├── handler.js
    ├── handler.js.map
    └── webhooks.zip
```

The .js.map files are the source maps, which are now pulled out of handler.js and therefore out of the *.zip file.

The result of the new bundled size is… 590kb 😀. This is even smaller than what serverless-esbuild produces!

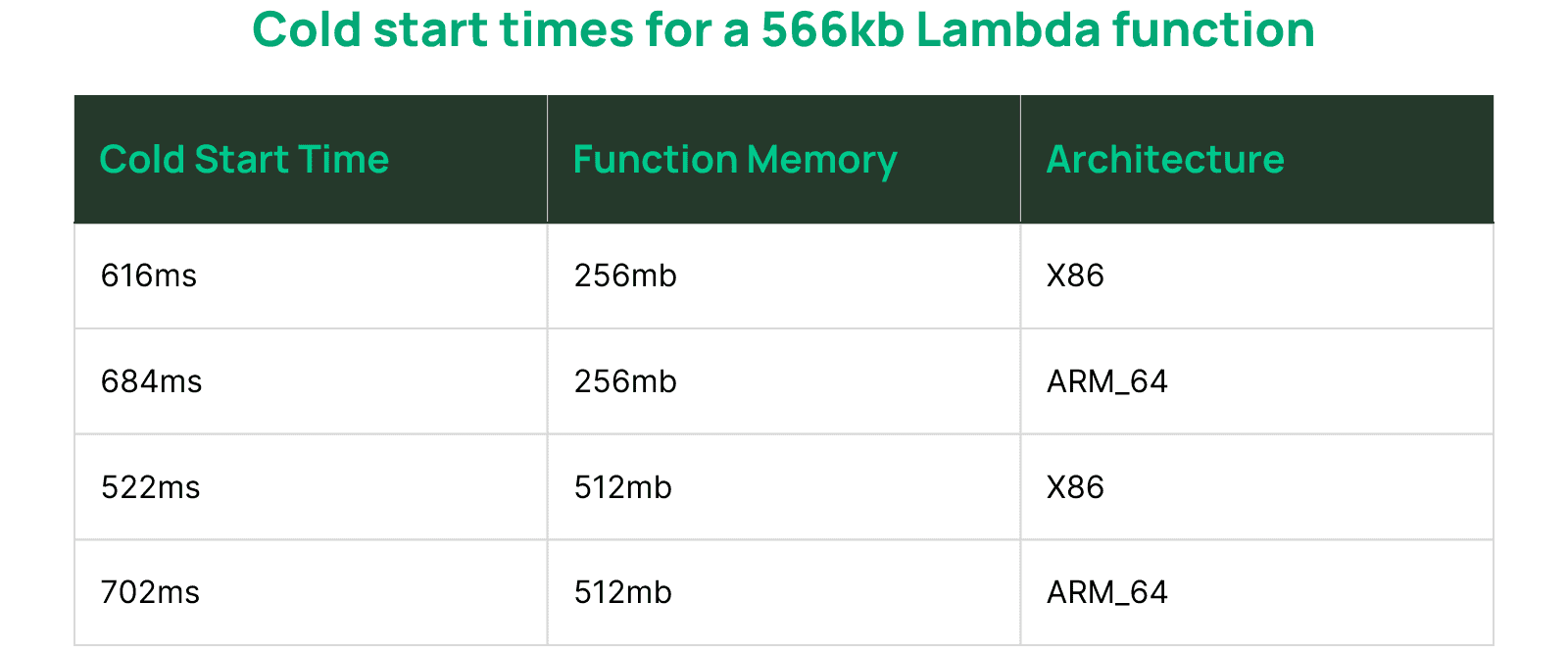

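But can we go further? What if we set keepNames to false? With keepNames enabled, esbuild adds extra code to preserve the original name property of functions and classes; turning it off lets the minifier rename them freely and drop that code. The result of building with keepNames: false is 566kb. Let’s see how it performs in our tests using this 566kb bundle: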

This is awesome! Using our existing Lambda configuration, we have reduced our cold start times from ~1 second to ~600ms, a 40% savings!
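For a feel of what keepNames costs, here is a rough sketch of the mechanism. When the option is on, esbuild wraps renamed functions and classes with a small helper that re-attaches the original name via Object.defineProperty, and each of those wrapper calls adds bytes to the bundle. The code below approximates that helper by hand; `keepName`, `a`, and `processWebhook` are made-up names, and the exact output esbuild generates may differ.

```typescript
// Approximation of the helper a bundler can inject to preserve names:
// it redefines the function's `name` property after minification renamed it.
const keepName = <T extends Function>(target: T, value: string): T =>
  Object.defineProperty(target, 'name', { value, configurable: true });

// What a minifier might turn `function processWebhook() {}` into.
function a(): void {}

// Without the helper, stack traces and logs would only show the short name.
console.log(`before: ${a.name}`);

// With the helper, the original name is restored at the cost of one extra call.
const restored = keepName(a, 'processWebhook');
console.log(`after: ${restored.name}`);
```

The trade-off is that with keepNames: false, anything that relies on function or class names at runtime (some loggers and dependency injection libraries, for example) will see the minified names instead.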

Throughout our Lambda functions we use the AWS SDK extensively. The AWS SDK is special because the Node.js Lambda runtime already includes a version of it, so in theory you don’t need to bundle it with the Lambda asset and can mark it as external instead. This is a common optimization that I have read about. Let’s try it.

To do this, we can add external: ['@aws-sdk/*'] to the build parameters.

import * as fs from 'fs';

import * as path from 'path';

import {build} from 'esbuild';

const functionsDir = 'src';

const outDir = 'dist';

const entryPoints = fs

.readdirSync(path.join(__dirname, functionsDir))

.filter(entry => entry !== 'common')

.map(entry => `${functionsDir}/${entry}/handler.ts`);

build({

entryPoints,

bundle: true,

outdir: path.join(__dirname, outDir),

outbase: functionsDir,

platform: 'node',

sourcemap: 'external',

write: true,

minify: true,

tsconfig: './tsconfig.json',

keepNames: false,

external: ['@aws-sdk/*'], // adding this line here

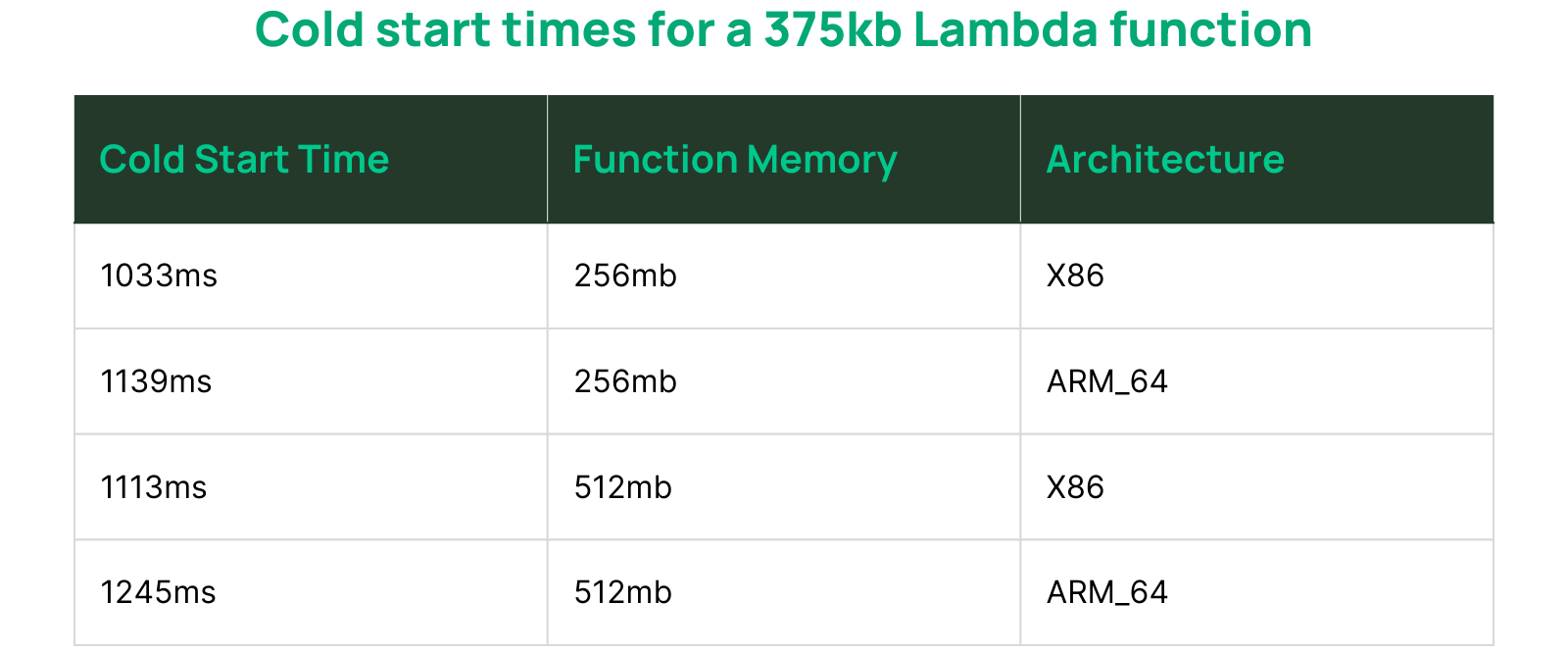

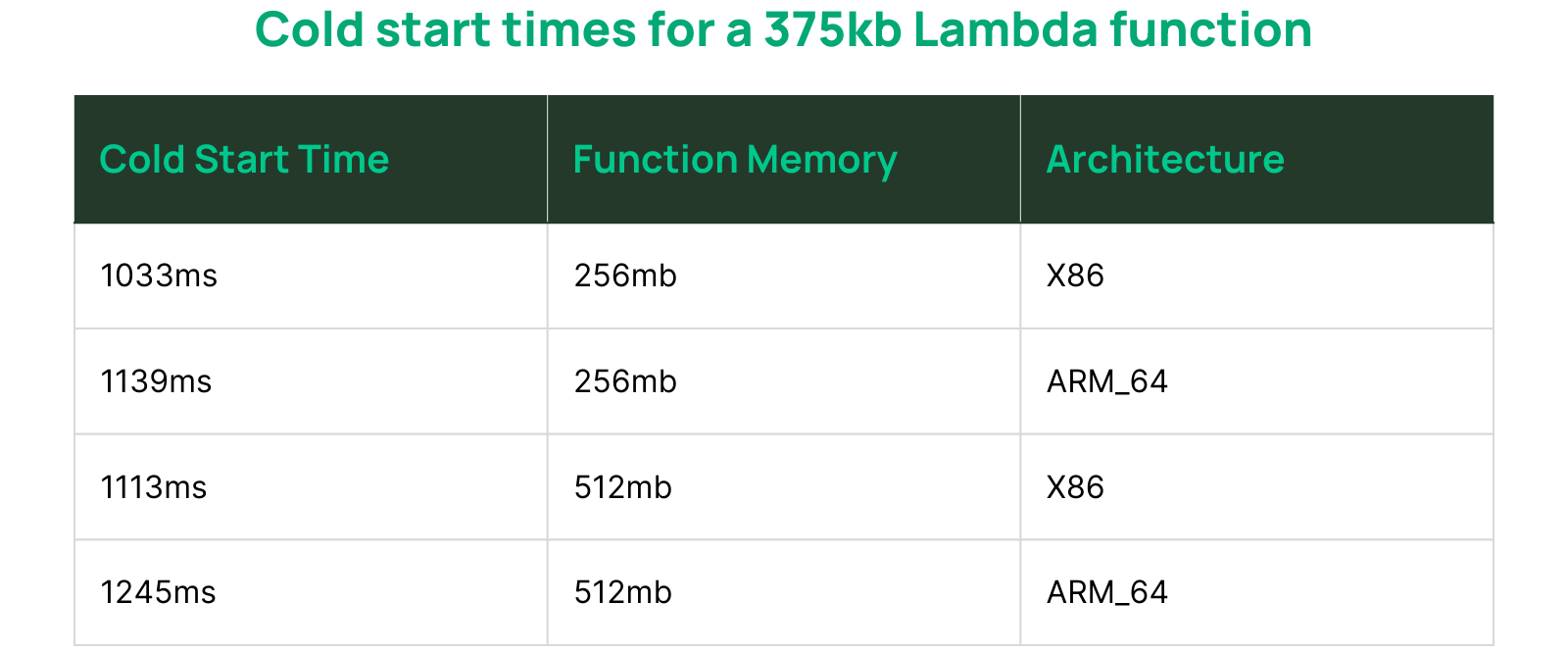

}).catch(() => process.exit(1));Now when we bundle our Lambda asset, it’s reduced down to 375kb 😱. Let’s see how it performs.

Interesting… Even though the bundle size was reduced significantly, it looks like cold start times went back to our original times – and then some. I don’t have a great explanation for this, other than there must be some dynamic linking going on at the time of cold start, linking the AWS SDK version that exists inside of the Lambda runtime with our code.

Since this doesn’t work, I think we can safely remove externalizing the aws-sdk from our build. This has the added benefit of:

- Being able to run the Lambda locally since our bundle includes all dependencies required to run.

- Being able to pin the AWS SDK to an explicit version, rather than relying on the version bundled with the Lambda runtime.

So here is our final esbuild configuration.

```typescript
import * as fs from 'fs';
import * as path from 'path';
import {build} from 'esbuild';

const functionsDir = 'src';
const outDir = 'dist';

// One entry point per function directory under src/, skipping the shared "common" code
const entryPoints = fs
  .readdirSync(path.join(__dirname, functionsDir))
  .filter(entry => entry !== 'common')
  .map(entry => `${functionsDir}/${entry}/handler.ts`);

build({
  entryPoints,
  bundle: true,
  outdir: path.join(__dirname, outDir),
  outbase: functionsDir,
  platform: 'node',
  sourcemap: 'external', // keep source maps out of the deployed bundle
  write: true,
  tsconfig: './tsconfig.json',
  minify: true,
  keepNames: false,
}).catch(() => process.exit(1));
```

Architecture

Throughout these tests we have been testing both the x86 and arm64 Lambda architectures to see if there was any difference between the two. The arm64 architecture has been consistently slower than the x86 one. This was a bit surprising since we have benchmarked some backend workloads on EC2 that are faster on arm64. However, we were consistently seeing a ~15% performance reduction on arm64 inside of Lambda. Because of this, I am going to focus the remaining tests on the x86 architecture.

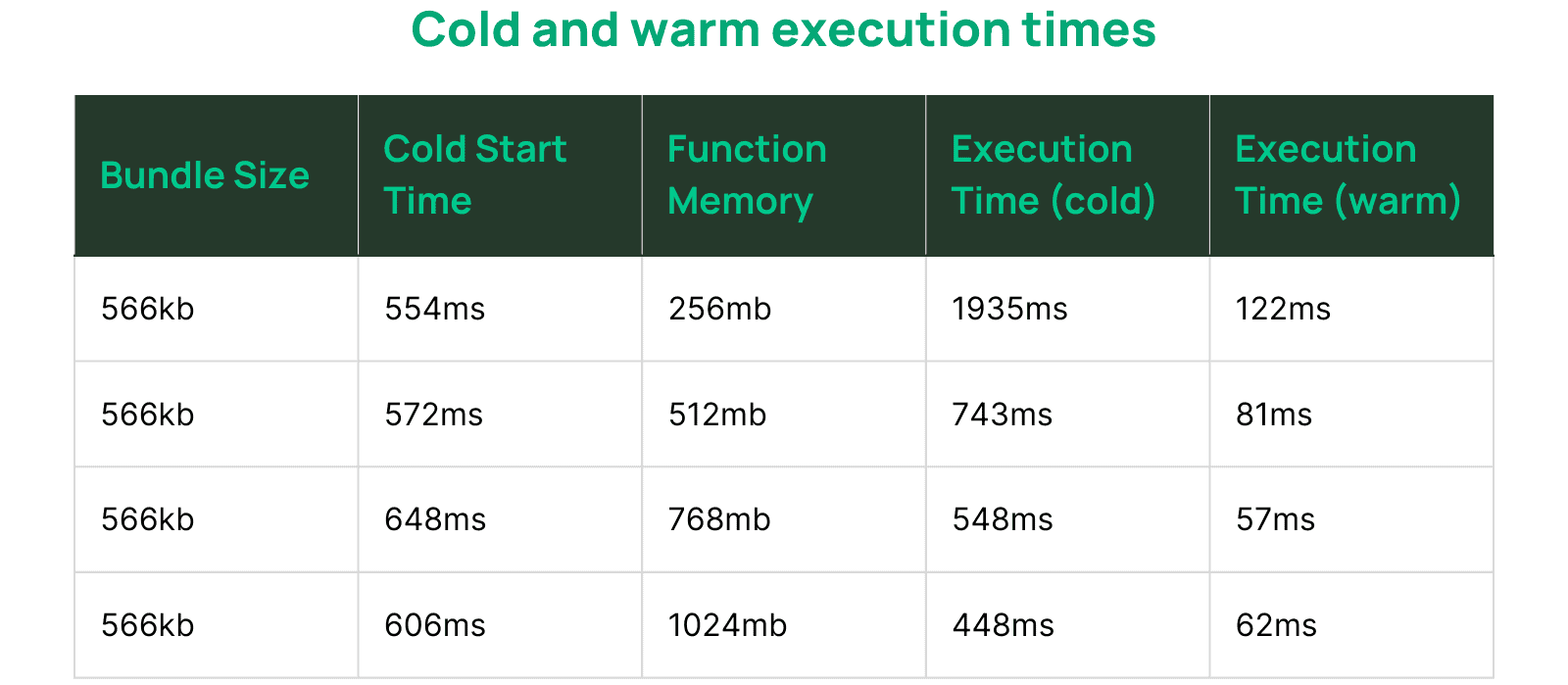

These last few tests are going to try optimizing our Lambda memory configuration. In this table, we are adding 2 new columns: Execution Time (cold) and Execution Time (warm). These will be used to help us determine if the amount of Lambda memory is limiting the execution in any way.

Based on these results, it looks like the sweet spot between performance and Lambda memory is somewhere between 512mb and 768mb. I think for now we will increase our Lambda function memory to 512mb, and if we start seeing slowdowns during cold starts, the next step would be to increase the memory size one more step.
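The memory versus billed duration trade-off comes down to simple arithmetic: Lambda compute is billed in GB-seconds, so doubling memory only costs more if the billed duration does not drop by about half. The sketch below works that out for a few memory sizes. The price per GB-second is an assumption (check the current AWS pricing for your region and architecture), the billed durations are made-up placeholders rather than measurements from this post, and the per-request charge is left out.

```typescript
// ASSUMPTION: on-demand x86 price per GB-second; verify against current AWS pricing.
const PRICE_PER_GB_SECOND = 0.0000166667;

// Compute-only cost (USD) of one million invocations at a given memory size and billed duration.
function costPerMillionInvocations(memoryMb: number, billedMs: number): number {
  const gbSeconds = (memoryMb / 1024) * (billedMs / 1000);
  return gbSeconds * PRICE_PER_GB_SECOND * 1e6;
}

// HYPOTHETICAL billed durations, for illustration only. Replace with your own measurements.
const candidates = [
  { memoryMb: 256, billedMs: 120 },
  { memoryMb: 512, billedMs: 60 },
  { memoryMb: 768, billedMs: 55 },
  { memoryMb: 1024, billedMs: 50 },
];

for (const c of candidates) {
  const cost = costPerMillionInvocations(c.memoryMb, c.billedMs);
  console.log(`${c.memoryMb}mb @ ${c.billedMs}ms: $${cost.toFixed(2)} per 1M invocations`);
}
```

Plugging in real billed durations from your own tests shows where extra memory stops paying for itself.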

Conclusion

So that’s been the journey here at Momento as we improved our Lambda packaging and cold start times using esbuild. By minifying our code and externalizing our source maps, we reduced our cold start times by ~40%! We’ll be using this pattern in the future to keep our Lambda functions small and fast. It will also shorten developer cycles by providing a boilerplate template for standing up new multi-Lambda-function repos and adding additional functions to existing applications.

And after all this, I can’t *not* mention AJ Stuyvenberg—the king of cold starts. If you want to further reduce cold starts, definitely check out his article about identifying lazy loading dependencies!